Google Reveals How They Keep Search Fresh and Relevant

Danny Sullivan, Google’s Public Liaison for Search, recently published an article explaining how Google implements changes to its search algorithms. His overview of how those changes show up in search gives us a better understanding of how updates roll out across queries.

The article also sums up how Google works as an information source for users, since Sullivan extends it to a closer look at SERP features such as the Knowledge Graph, featured snippets, and predictive features.

“Our search algorithms are processed with complex math equations that rely on numerous variables, and last year alone, we made more than 3,200 changes to our search systems,” according to Danny Sullivan. The regular updates that the search engine makes in order to display relevant and useful results are evaluated with great care and precision.

For SEOs, it is important to work with Google and not against it. I believe that the most useful white-hat SEO tactic is following the search engine’s guidelines on its processes and algorithms. Sullivan’s article is a great look into how Google displays search results and how it deals with potential issues that come with SERP features. How does Google keep search useful and relevant? Read on:

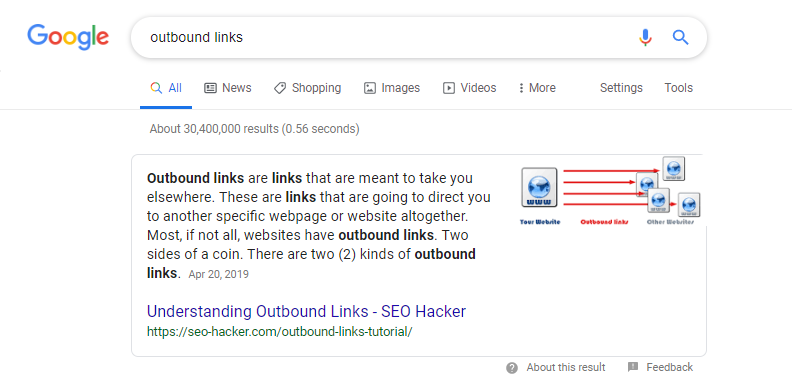

Utilizing Unique Formats

Many people are visual by nature, which is why a search result presented as a featured snippet can be so helpful. As you may well know, a featured snippet, popularly known as “Rank Zero”, suggests that a web page meets Google’s standards for both quality and credibility. Appearing as a featured snippet is a signal of a strong, well-optimized web page.

Danny Sullivan reiterates that Google prohibits snippets that risk violating its policies, such as content that is harmful, violent, hateful, or sexually explicit, or that lacks expert authority on public interest topics.

Google did not mention any particular implementation that puts a page at Rank Zero, but it did say that a featured snippet is removed only if it violates policies. So it is reasonable to assume that as long as your content is relevant and useful to the general public, you are good to go.

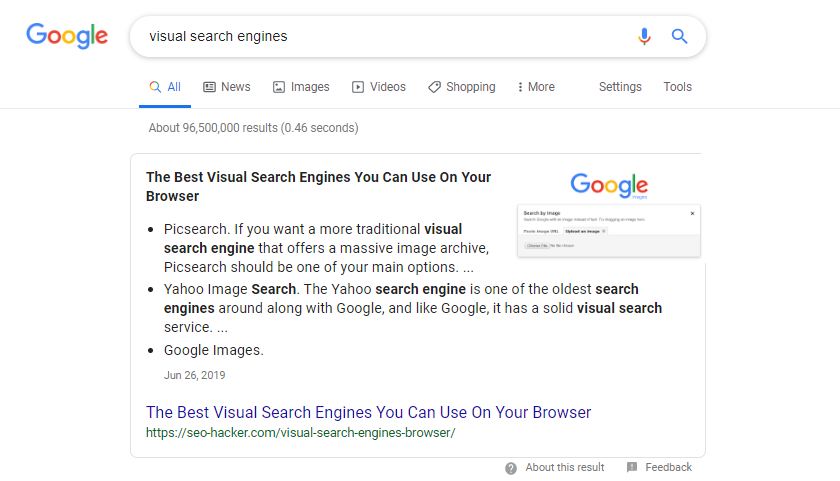

Providing Factual and Relevant Information

Google Search aims to reflect the facts that people search for on the web. Whatever the who, what, or where, the purpose of its algorithmic changes is to provide answers that satisfy the queries users enter every day. The Knowledge Graph is its way of automatically connecting attributes of this information to structured databases, licensed data, and other informational sources.

Changes to the Knowledge Graph are rare, since it is used to display results that are factual and properly presented. One of the processes Sullivan explains in his article is the use of manual verification and user feedback. When an error appears, the information is updated manually, especially if Google’s systems have not corrected it on their own.

Knowledge graphs can look as simple as this:

Or they can be displayed as a full-blown knowledge panel with a collection of the most relevant answers to your query:

Google also gives people and organizations the ability to suggest edits to their own knowledge panels. This process helps maintain content authority and accuracy in representing people, places, things, or services. Additionally, Google has tools dedicated to fixing errors in the Knowledge Graph and other SERP features.

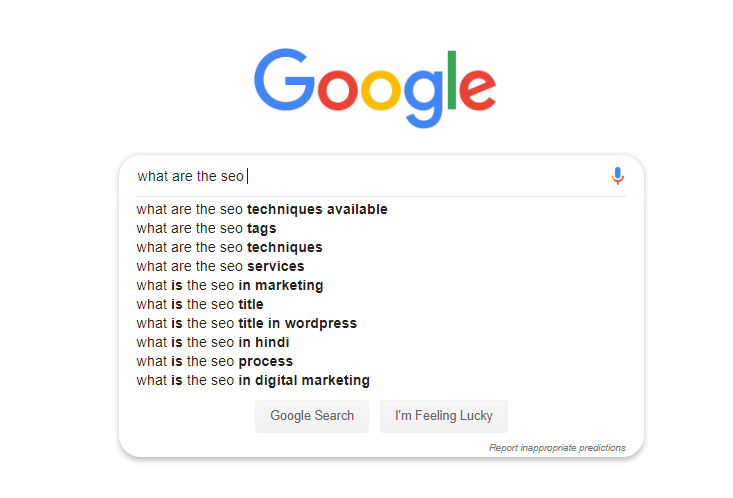

Maintaining A Personal Approach to Search

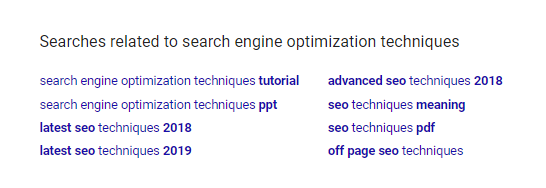

Predictive text is everywhere, and it is the closest and most basic form of AI that we encounter in the digital world. Simply put, predictive features help users navigate searches more quickly. Common searches help expand the scope of a topic, which is useful for both user experience and finding information. The section in search labeled “People also search for” helps users navigate to other topics related to what they were looking for in the first place.

Google keeps predictive features fresh by ensuring that related searches do not have a negative impact on groups; the same applies to predictions that could be offensive to users. Predictions are left out of this feature when they are policy-violating, either because they were reported and found to violate policy or because the system detected them as such.
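To make the idea concrete, here is a minimal sketch of prefix-based predictions with a policy filter applied before anything is shown. It is only an illustration and not how Google's systems actually work: the sample queries, the `BLOCKED_TERMS` set, and the `violates_policy` and `predict` functions are all hypothetical names made up for this example.

```python
from typing import Iterable

# Hypothetical past queries; a real system would draw on far more data.
PAST_QUERIES = [
    "how to bake bread",
    "how to bake a cake",
    "how to build a deck",
    "how to hurt someone",
]

# Hypothetical stand-in for a policy check: a small set of blocked words.
BLOCKED_TERMS = {"hurt"}


def violates_policy(query: str) -> bool:
    """Return True if any word in the query is on the blocked list."""
    return any(word in BLOCKED_TERMS for word in query.lower().split())


def predict(prefix: str, queries: Iterable[str] = PAST_QUERIES, limit: int = 3) -> list[str]:
    """Suggest past queries that start with the prefix, minus policy violations."""
    prefix = prefix.lower()
    matches = [q for q in queries if q.lower().startswith(prefix)]
    allowed = [q for q in matches if not violates_policy(q)]
    # Alphabetical order keeps the toy example deterministic.
    return sorted(allowed)[:limit]


if __name__ == "__main__":
    print(predict("how to b"))
    print(predict("how to h"))
```

The point of the design is that filtering happens before a prediction is displayed, so a blocked query never appears as a suggestion even when it matches what the user has typed.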

Ranking Content by Relevance and Authority

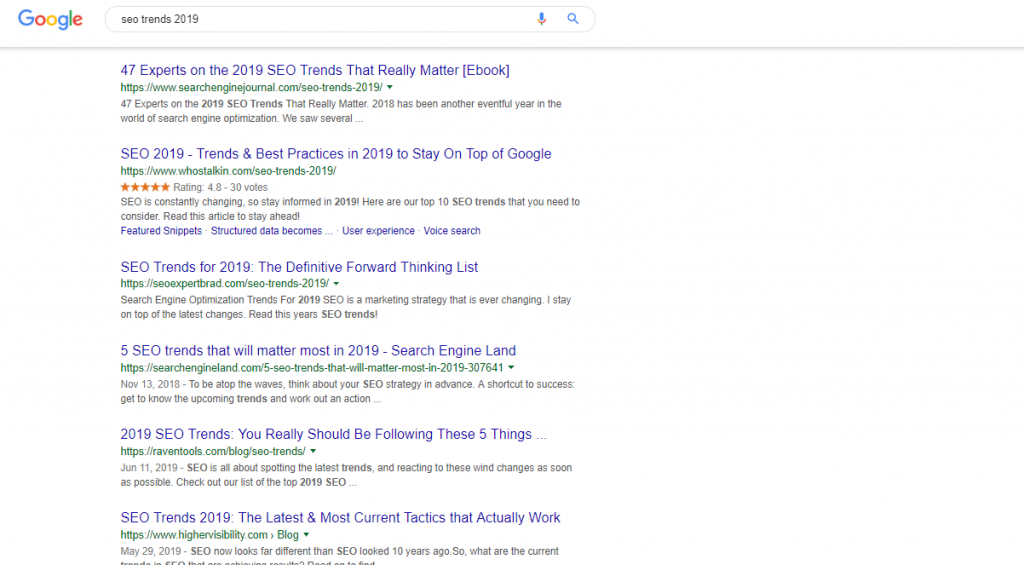

SERP features are great, but you cannot deny that the most coveted spot on the search results page is the organic result. Helpful as the features are, organic listings are what keep people coming back to the search engine. The “blue links” are the lifeblood of search engines, businesses, and SEO organizations.

Ranking the results is an automated process. It sifts through a hundred billion pages that Google has indexed while crawling the web and arranges them according to their relevance to the keywords you use.
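As a rough illustration of ranking by keyword relevance, the sketch below scores each page by how often the query's words appear in it and sorts the pages by that score. This is a toy model under simplifying assumptions: Google's real ranking relies on many more signals, and the sample pages and the `tokenize`, `score`, and `rank` functions are hypothetical names invented for this example.

```python
import re
from collections import Counter

# Hypothetical mini "index" mapping page URLs to their text.
SAMPLE_PAGES = {
    "a.example": "Fresh bread recipes and bread baking tips",
    "b.example": "Car repair guide for beginners",
    "c.example": "Where to buy bread",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, page_text: str) -> int:
    """Count how many times the query's distinct words occur in the page."""
    counts = Counter(tokenize(page_text))
    return sum(counts[term] for term in set(tokenize(query)))


def rank(query: str, pages: dict[str, str]) -> list[str]:
    """Return URLs of matching pages, highest score first (ties broken by URL)."""
    scored = [(score(query, text), url) for url, text in pages.items()]
    ordered = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [url for points, url in ordered if points > 0]


if __name__ == "__main__":
    print(rank("bread recipes", SAMPLE_PAGES))
```

Nothing in this sketch reorders results by hand; the order falls out of the scoring function alone, which is the sense in which a ranking process is automated.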

Although Google’s ranking factors can be pretty vague, this recently published article made one thing clear: Google does not manually remove or re-order organic results on the search results page. The search engine does not manually intervene in a particular search query in order to “address ranking challenges”.

This is a bold statement, considering how many critics claim that Google is biased in the domains it ranks in the organic listings.

Eliminating Spam in Search

Spam protections are vital, especially when content fails to meet Google’s long-standing webmaster guidelines. They are not limited to spam, since they also cover violations involving malware and dangerous sites. The search engine’s spam protection systems are automated to keep these types of content out of the ranking system. This also applies to links that are too spammy for their own good.

SEOs who think that black-hat tactics are working in their favor should think again, because Google notices them. Manual actions follow if the automated systems fail to detect this dangerous content. The article also states that these actions do not target a particular search result or query; rather, they apply to all content that users can find in the SERPs.

Adherence to Legal Policies

The search pages are meant to disseminate information and to provide an avenue of safe browsing for everyone. Unfortunately, broad access to information also means that search can surface unsafe or unlawful content, such as child abuse imagery and material subject to copyright infringement claims. Such content undermines Google’s purpose of being a melting pot of information, since it interferes with people’s protection from sensitive material.

Google’s legal compliance is part of its public commitment to keep people safe while it strives to make search results and features useful to everyone.

Key Takeaway

Google’s transparency about how its processes work is a win for SEOs. Keeping this information in mind will help us come up with ethical strategies. Optimizing sites is not an overnight job. There is hard work behind strategies, applications, and research, which means that any claim that your site can rank in just 24 hours is outrageous.

Google works just as hard to devise these features and to monitor the search results pages to the best of its ability. Rather than making an enemy out of Google, why not be its ally in providing users with the most relevant content and the best experience? Read the full article here.

Do you think there are more areas that Google has to work on? Comment down below!