How to Fix Index Coverage Errors in Google Search Console

How do I fix Google indexing issues?

Quick Answer: There are many reasons why you might be experiencing indexing errors. You can find out what’s causing them through Google Search Console’s Coverage report, and the correct fix depends on the errors you see there. Follow our guide below, along with Google’s own guidelines, to fix each error. Then request reindexing of the fixed pages through Google Search Console. This prompts Google to recrawl them, and if the fix is correct, the pages will likely be able to appear in the SERPs.

Overview

Indexing and crawling are two highly important processes for websites and search engines. For a website to appear in the search results, its pages must first be crawled by a search engine bot and then indexed. As an SEO, you need to make sure your website gets crawled and indexed, and that no errors affect how it appears in the search results.

Google Search Console is the SEO community’s best friend. It allows us to submit our websites to Google and let Google know they exist. It is the tool that lets us see our site through Google’s eyes: we can quickly check which pages are being shown in the search results and whether our changes or improvements have been picked up.

One of the best things about Google Search Console is that it shows us indexing errors that might negatively affect a website’s ranking. The Coverage report shows the status of the pages in the sitemap you submitted, as well as pages that were not in your sitemap but were crawled anyway. Fixing the errors on these pages is crucial.

An important page on your website that has an error will probably rank poorly, or not at all, if Google has a hard time crawling and indexing it. That is why you need to know which errors can appear in Search Console’s Index Coverage report and how to fix them.

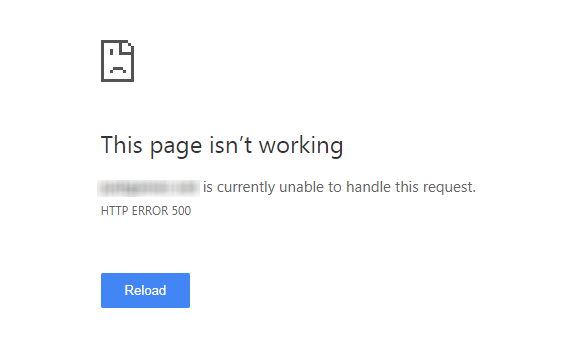

Server Error 5xx

5xx errors happen when a website’s server can’t handle or process a request made by Googlebot while it was crawling the page. Not only does this error cause problems with crawling, it also means your users may have a hard time accessing your website.

5xx errors are usually caused by a problem with your server: it might be down, overloaded, or misconfigured. They can also be caused by a problem in your website’s DNS configuration or content management system.

To fix this problem, consult your web developer or check whether your hosting provider is having issues.
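If you have access to your server’s access logs, you can also check how often Googlebot is actually receiving 5xx responses before you contact your developer or host. The sketch below assumes the common Apache/Nginx “combined” log format; the function name googlebot_5xx and the sample log lines are hypothetical. It matches on the user-agent string alone, which can be spoofed, so treat the counts as a rough signal rather than proof.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# IP - - [time] "METHOD /path HTTP/x" STATUS BYTES "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_5xx(lines):
    """Count 5xx responses per path for requests whose user agent mentions Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that are not in the combined format
        if "Googlebot" in match.group("agent") and match.group("status").startswith("5"):
            counts[match.group("path")] += 1
    return counts


sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/ HTTP/1.1" 503 0 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [10/Oct/2023:13:55:40 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
print(googlebot_5xx(sample))  # Counter({'/blog/': 1})
```

To run it on a real log, pass an open file instead of the sample list, for example googlebot_5xx(open("access.log")), where access.log is a placeholder path.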

Redirect Error

Redirects are normal on any website. They are used to send visitors from old pages or posts that are no longer useful to new ones, and to redirect URLs that no longer exist.

Each URL should have only a single 301 redirect. When a URL redirects to another URL that also redirects to another one, it creates a redirect chain, which is the usual cause of this error.

Redirect errors happen when a redirect chain is too long, when it forms a loop, when it exceeds the maximum redirect limit (Chrome’s limit is 20 redirects), or when one of the URLs in the chain is empty.

Make sure all your redirects point to live URLs, and use only one 301 redirect per URL to avoid redirect chains.
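To spot chains and loops across many redirects, you can trace each URL through your redirect rules. The sketch below assumes you have exported your redirects as a simple source-to-target mapping (for example, from a redirect plugin or your server config); the function names, sample URLs, and the 10-hop limit are illustrative choices, not Google’s rules.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow a URL through a {source: target} redirect map.

    Returns (final_url, hops, problem), where problem is None, "loop", or "too many hops".
    """
    seen = {start}
    url, hops = start, []
    while url in redirects:
        if len(hops) >= max_hops:
            return url, hops, "too many hops"
        target = redirects[url]
        hops.append((url, target))
        if target in seen:  # we have been here before: the chain loops
            return target, hops, "loop"
        seen.add(target)
        url = target
    return url, hops, None


def flatten(redirects):
    """Point every source straight at its final destination (one 301 each); skip loops."""
    flat = {}
    for source in redirects:
        final, _, problem = trace_redirects(source, redirects)
        if problem is None:
            flat[source] = final
    return flat


redirects = {
    "/old-post/": "/2019-post/",
    "/2019-post/": "/new-post/",
    "/a/": "/b/",
    "/b/": "/a/",
}
print(trace_redirects("/old-post/", redirects))
# ('/new-post/', [('/old-post/', '/2019-post/'), ('/2019-post/', '/new-post/')], None)
print(trace_redirects("/a/", redirects)[2])  # loop
print(flatten(redirects))  # {'/old-post/': '/new-post/', '/2019-post/': '/new-post/'}
```

flatten() gives you the single 301 each old URL should use instead of a chain; loops are left out on purpose so you can fix them by hand.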

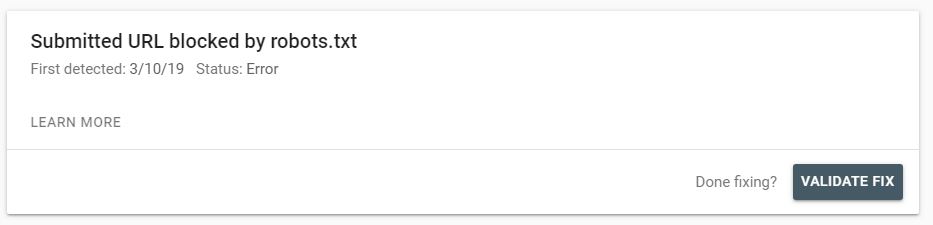

Submitted URL Blocked by Robots.txt

URLs submitted in your website’s sitemap tell Google that these pages are important and should be crawled and indexed. If some of those URLs are also blocked in your robots.txt file, you are sending Googlebot conflicting signals.

To fix this error, first check whether the blocked URLs are important pages. If they are important and were blocked by accident, update your robots.txt file and remove the rules that block them. Then confirm that those URLs are no longer blocked using the robots.txt Tester in the old version of Google Search Console.

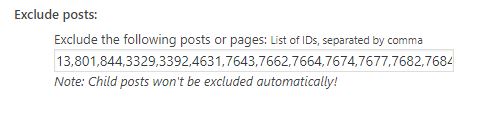
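You can also check your sitemap URLs against your robots.txt rules in bulk. This sketch uses Python’s built-in urllib.robotparser; the helper name blocked_sitemap_urls, the sample robots.txt, and the URLs are made up. Note that this parser uses simple prefix matching and does not implement Google’s * and $ wildcards, so confirm any borderline results in Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_sitemap_urls(robots_txt, sitemap_urls, user_agent="Googlebot"):
    """Return the sitemap URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network fetch
    return [url for url in sitemap_urls if not parser.can_fetch(user_agent, url)]


robots = """\
User-agent: *
Disallow: /checkout/
Disallow: /services/
"""
urls = [
    "https://www.example.com/",
    "https://www.example.com/services/seo/",
    "https://www.example.com/blog/",
]
print(blocked_sitemap_urls(robots, urls))  # ['https://www.example.com/services/seo/']
```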

If you blocked a URL on purpose and it is submitted in your sitemap, remove that URL from your sitemap. If you’re using WordPress, first find the post ID of the page or post you are removing. To do that, go to Posts or Pages and click Edit on the post or page you want to remove. Check the URL bar and you will see the post ID (the number after post= in the address).

Copy that post ID and go to your sitemap settings. I’m using Google XML Sitemaps, and I find it really easy to use. Under Excluded Items, you will find Excluded Posts. Enter the ID of the post you want to exclude from the sitemap and click Save.

Submitted URL Marked ‘noindex’

This error is similar to the Submitted URL Blocked by Robots.txt error. Since submitting a URL in the sitemap means you want Google to index it, placing a ‘noindex’ tag on it makes no sense.

Check whether those URLs are important pages. A ‘noindex’ tag means you don’t want Google to show the page in the search results, so if a product or landing page has a ‘noindex’ tag by accident, that is bad news for you.
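A noindex directive can live in a robots meta tag in the page’s HTML or in an X-Robots-Tag HTTP header, so it is worth checking both. The sketch below is a minimal check using Python’s standard html.parser; has_noindex is a hypothetical helper, and you would pass in the HTML and header value you fetched yourself. It also treats the “none” directive as noindex, since “none” means noindex plus nofollow.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values keep their case.
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(values.get("content", ""))


def _tokens(value):
    # "googlebot: noindex, nofollow" -> ["noindex", "nofollow"] (drops a user-agent prefix)
    return [part.rsplit(":", 1)[-1].strip() for part in value.lower().split(",")]


def has_noindex(html, x_robots_tag=""):
    """True if a robots meta tag or the X-Robots-Tag header contains noindex or none."""
    parser = RobotsMetaParser()
    parser.feed(html)
    for value in parser.directives + [x_robots_tag]:
        tokens = _tokens(value)
        if "noindex" in tokens or "none" in tokens:
            return True
    return False


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(has_noindex("<p>Product page</p>", x_robots_tag="googlebot: noindex"))  # True
print(has_noindex('<meta name="robots" content="index, follow">'))  # False
```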

If the URLs flagged under this error are no longer important, remove them from the sitemap as described above.

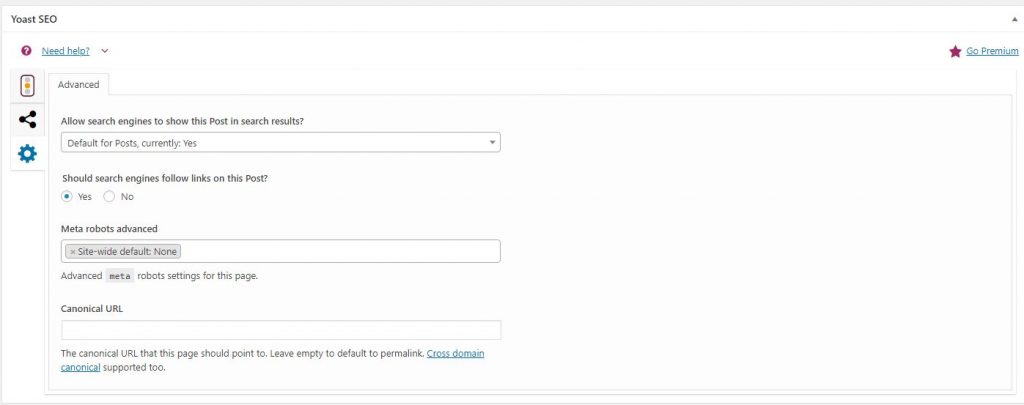

If the URLs are important, remove the noindex tag from them. If you’re using Yoast SEO, go to the Page or Post that is tagged as noindex. Scroll down until you see the Yoast SEO box and click on the Gear Icon.

Make sure the option “Allow search engines to show this Post in search results?” is set to “Yes”.

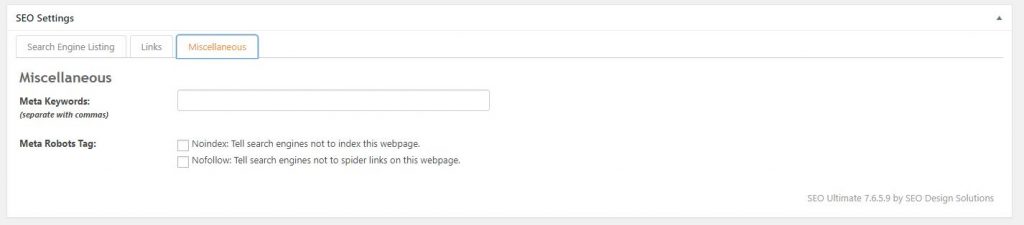

If you’re using SEO Ultimate, the process is similar. Go to the post or page, then scroll down to the SEO Ultimate box. Under Miscellaneous, make sure the “Noindex” box is unchecked.

Submitted URL Seems to be a Soft 404

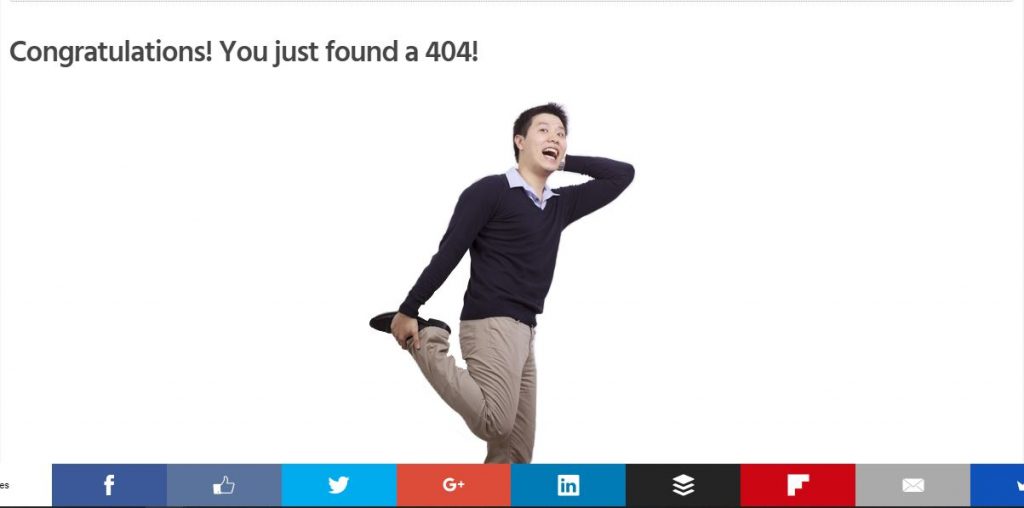

A soft 404 error means that a URL submitted in your sitemap no longer exists but still returns a success status code (200). Soft 404 errors are bad for both users and Googlebot.

Because the server reports it as a normal page, users might find it in the search results, only to land on a blank page. It also wastes your crawl budget.

Check the URLs that Google flags as soft 404s. If those pages were deleted or never existed, make sure they return a 404 (Not Found) status code. If their content is still relevant but lives elsewhere, use a 301 redirect to the live page.
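Google does not publish exactly how it classifies soft 404s, but you can pre-screen your own URLs with a simple heuristic before checking them in Search Console. In this sketch, the phrase list, the 50-word threshold, and the function name looks_like_soft_404 are assumptions you should tune for your own site; it only flags candidates for manual review and is not Google’s classifier.

```python
# Phrases and threshold are assumptions; adjust them to match your own templates.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing was found")


def looks_like_soft_404(status, body, min_words=50):
    """Flag a 200 response that is nearly empty or reads like an error page."""
    if status != 200:
        return False  # real 404/410 responses and redirects are not soft 404s
    text = body.lower()
    too_thin = len(text.split()) < min_words  # crude: HTML tags count as words too
    says_missing = any(phrase in text for phrase in NOT_FOUND_PHRASES)
    return too_thin or says_missing


print(looks_like_soft_404(200, "<h1>Page not found</h1>"))  # True
print(looks_like_soft_404(404, "<h1>Page not found</h1>"))  # False
```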

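Submitted URL Returns Unauthorized Request (401)

A 401 error happens when Google tries to crawl a submitted URL but the server responds that Google is not authorized to access it. This usually happens when webmasters put security measures in place against bad bots or spammers. To fix this error, run a DNS lookup on the blocked requests to verify that they really come from Googlebot, and then allow verified Googlebot requests through.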
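Google’s documented way to verify Googlebot is a reverse DNS lookup on the requesting IP, a check that the host name belongs to Google, and then a forward DNS lookup to confirm it resolves back to the same IP. The sketch below follows those steps with Python’s socket module. The domain list is an assumption based on Google’s crawler verification guide, so check that guide for the current list, and note that gethostbyname_ex only returns IPv4 addresses. The DNS functions are parameters so that the hypothetical fake resolvers in the example can stand in for real lookups.

```python
import socket

# Assumed from Google's crawler verification guide; check it for the current list.
GOOGLEBOT_DOMAINS = (".googlebot.com", ".google.com")


def is_googlebot(ip, reverse=socket.gethostbyaddr, forward=socket.gethostbyname_ex):
    """Reverse DNS -> domain check -> forward DNS back to the same IP."""
    try:
        host = reverse(ip)[0]  # e.g. "crawl-66-249-66-1.googlebot.com"
    except OSError:  # socket.herror / socket.gaierror: no PTR record
        return False
    if not host.endswith(GOOGLEBOT_DOMAINS):
        return False
    try:
        addresses = forward(host)[2]  # IPv4 addresses the host name resolves to
    except OSError:
        return False
    return ip in addresses


# Hypothetical stand-ins so the example runs without network access.
def fake_reverse(ip):
    return ("crawl-66-249-66-1.googlebot.com", [], [ip])


def fake_forward(host):
    return (host, [], ["66.249.66.1"])


print(is_googlebot("66.249.66.1", fake_reverse, fake_forward))  # True
print(is_googlebot("203.0.113.9", fake_reverse, fake_forward))  # False: forward lookup mismatch
```

In production you would call is_googlebot(ip) with the default resolvers, and cache the result per IP so you are not doing two DNS lookups on every request.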

Submitted URL Not Found (404)

A page that returns a 404 error has been deleted or does not exist. Most of the time, when you delete a post or page, it is automatically removed from the sitemap. However, things can go wrong, and a deleted URL might still be listed in your sitemap.

If the content still exists but has moved to a new URL, a 301 redirect to that URL will fix the error. For content that has been permanently deleted, leaving it as a 404 is not a problem; just make sure the URL is removed from your sitemap.

Note that redirecting 404s to the homepage or to unrelated pages can be problematic for both users and Google.
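To find deleted URLs that are still in your sitemap, you can read every loc entry and check its status code. This sketch assumes a standard single urlset sitemap (not a sitemap index) and uses HEAD requests; some servers reject HEAD, in which case switch the method to GET. The function names and the sample sitemap are illustrative.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL from a standard <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def status_of(url, timeout=10):
    """Return the final HTTP status for a HEAD request (urllib follows redirects)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses arrive as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, timeout


def dead_sitemap_urls(xml_text):
    """Sitemap URLs that now return 404 (Not Found) or 410 (Gone)."""
    return [url for url in sitemap_urls(xml_text) if status_of(url) in (404, 410)]


sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/deleted-post/</loc></url>
</urlset>"""
print(sitemap_urls(sample_sitemap))
# ['https://www.example.com/', 'https://www.example.com/deleted-post/']
```

Running dead_sitemap_urls() on your real sitemap’s XML makes one request per URL, so it can be slow on large sites.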

Submitted URL Has Crawl Issue

URLs under this error have an unspecified problem that stops Google from crawling them and that does not fall into any of the other categories above.

Use the URL Inspection tool to get further information on how Google sees that web page and make improvements from there.

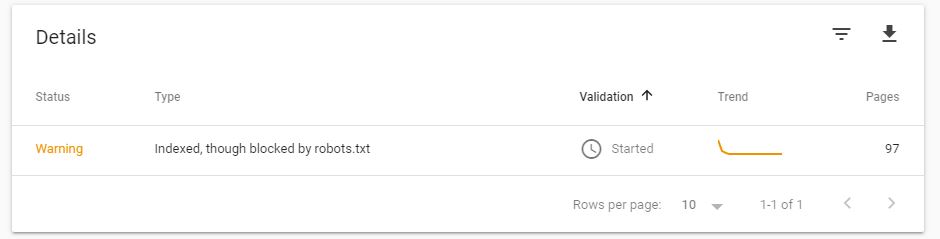

Warning: Indexed Though Blocked by Robots.txt

This is not an error but a warning, and it is the only category under the Warning tab of the Coverage report. It happens when a URL that is blocked by robots.txt is still indexed by Google.

Google respects robots.txt and will not crawl a disallowed URL. However, if other pages, including your own internal links, link to that URL, Google can still index the URL without crawling its content.

The noindex tag and the robots.txt file serve very different purposes, yet they are still often confused. If you want these URLs removed from the search results, remove them from the robots.txt file so Google can crawl the pages and see the ‘noindex’ tag on them. The robots.txt file is better suited to controlling your crawl budget.
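A quick way to catch this conflict on your own site is to cross-check which noindexed URLs are also disallowed in robots.txt, since Google cannot see a noindex tag on a page it is not allowed to crawl. In this sketch, the pages mapping (URL to whether it carries noindex) is a hypothetical input you would build from your own crawl, and the robots.txt rules are made up. As with any check based on urllib.robotparser, Google’s wildcard rules are not supported.

```python
from urllib.robotparser import RobotFileParser


def unreachable_noindex(robots_txt, pages, user_agent="Googlebot"):
    """List URLs whose noindex tag Google cannot see because robots.txt blocks crawling.

    pages maps URL -> True if that page carries a noindex directive.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url, noindex in pages.items()
            if noindex and not parser.can_fetch(user_agent, url)]


robots_rules = "User-agent: *\nDisallow: /tag/\n"
pages = {
    "https://www.example.com/tag/seo/": True,     # noindex, but blocked: Google never sees it
    "https://www.example.com/thank-you/": True,   # noindex and crawlable: works as intended
    "https://www.example.com/tag/old/": False,    # blocked, but no noindex to miss
}
print(unreachable_noindex(robots_rules, pages))  # ['https://www.example.com/tag/seo/']
```

Any URL this returns is a candidate for this warning: unblock it in robots.txt so the noindex tag can take effect.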

Key Takeaway

Checking for errors in Search Console should be part of your SEO routine. Optimize all your pages and make sure Google can crawl and index them without any problems. While submitting a sitemap does not directly affect your rankings, it helps Google notify you of any errors that might hurt your website’s rankings.