How to Measure AEO Performance: Key Metrics and Strategies for the AI-Driven Search Era

As search engines evolve into AI-driven search ecosystems, the old ways of measuring performance are starting to fall short. Metrics like clicks and impressions only show part of the story. They don’t reveal how your content actually shows up, educates, or engages within AI-generated results.

That’s where Answer Engine Optimization (AEO) comes in. AEO shifts the focus from surface-level stats to a deeper understanding of how your brand appears and is referenced in AI summaries, chat-based answers, and contextual responses. It’s a new way to evaluate visibility and influence in a world where generative search is rewriting the rules.

The real question today isn’t “Do I rank?” but “Am I seen and cited by AI?” This layer of search is fluid and unpredictable; mentions can appear or vanish depending on small changes in user intent, phrasing, or the model’s retrieval logic. Mastering AEO means learning to navigate that shifting terrain and ensuring your brand stays visible within it.

Author’s Note:

This article is the eighth entry in my AEO/GEO series, which explores how websites can keep up with new search ecosystems. If you’re new to the series, I recommend starting with the earlier pieces to understand how AI-driven retrieval, synthesis, and citation are reshaping the fundamentals of SEO.

Catch up on the series:

- How AI Overviews Impact CTR and SEO – How Google’s AI-generated results are changing click behavior and rankings.

- How Generative AI Is Changing Search Behavior – Why people search differently in the age of AI and what it means for your strategy.

- Mapping Content to User Goals – How to align structure and messaging with user intent to improve engagement.

- How Generative AI in Search Works – A breakdown of how large language models (LLMs) retrieve, rank, and synthesize information.

- Structuring Content for AI Extraction – A practical guide on formatting content so AI systems can easily parse and reuse it.

- Using Authority Signals and Schema Markup for AEO Success – How structured data and expert attributions help AI trust and cite your content.

- How to Structure Content for Multi-Turn Conversations in AI Search – How to design and organize your content so it remains relevant and referenced across multi-turn AI interactions.

Moving Beyond Traditional SEO Metrics

Search is no longer just about ranking on page one. It is now about being part of the answer. Answer Engine Optimization, or AEO, takes optimization a step further: it aims not only to improve search engine visibility but also to earn inclusion in AI-generated answers that respond directly to user queries.

AI-driven search experiences have also transformed the search journey: users are no longer just searching, they are conversing. These platforms curate and deliver quick, summarized, context-rich responses directly within the results, often without requiring a click through to a website.

Visibility now means more than appearing in search listings. It’s about being cited and recognized by the very AI models shaping what users see and trust. These citations and mentions have become just as valuable as traditional organic rankings, signaling a shift in how we define engagement and discoverability.

As search becomes more intelligent, success is no longer measured by clicks alone. A website may experience fewer visits, yet achieve greater reach and influence by being referenced or featured within AI answers.

To understand how to measure AEO success, we need to recognize the limitations of current analytics, define the right metrics, and track how our content appears, contributes, and delivers value across the AI-powered search ecosystem.

The Limitations of Click-Based Metrics

Generative search experiences are reshaping how people look for and receive information. Users now receive AI-generated answers instantly, often without visiting a website. As a result, traditional organic funnels are showing fewer clicks, even when overall visibility and influence may be increasing.

A decline in traffic doesn’t necessarily mean a decline in reach. Content that is cited, summarized, or referenced within AI-generated responses still reaches a wide audience. These mentions strengthen brand authority and awareness, even among users who never leave the search results.

Current analytics platforms are not yet equipped to measure this new layer of visibility. Google Analytics can track traffic from search, but it cannot distinguish between a visit from a traditional link and one originating from an AI Overview. Google Search Console provides impressions and clicks but offers no insight into whether your content was used to inform a generative summary.

This presents two key challenges. First, the generative answer layer remains invisible to most analytics tools. Second, even when visibility can be detected, it is often unstable — a query that cites your content today may produce different results tomorrow, even without changes to your site.

For SEO and AEO professionals, the next step is to develop new measurement frameworks that uncover this hidden layer, track performance over time, and connect these appearances to meaningful business outcomes such as engagement, brand recognition, and trust.

AEO Performance Indicators

Measuring AEO success requires a wider lens than traditional SEO metrics can provide. Clicks and impressions alone no longer capture the full story of visibility in AI-driven search. To understand how to measure AEO performance, brands need to track new indicators that reveal how content performs beyond the click.

AI Impressions

AI impressions reflect how often your content or brand appears within AI-generated summaries or conversational responses, even when it isn’t directly linked or quoted. They are similar to traditional SEO impressions, but with a crucial difference: they measure how frequently your content becomes part of the AI-generated answer itself.

This metric highlights when your content is recognized and surfaced by AI systems, boosting brand exposure even without a click. Each mention within an AI Overview or generative search result signals that your content is being treated as a reliable and contextually relevant source, trusted by both users and the AI models delivering the results.

Tracking AI impressions is still an emerging practice as generative search technology continues to evolve.

However, there are a few practical ways to start gathering insights:

- Monitor visibility within AI overviews through early access tools or beta reports, such as Google Search Console’s SGE experiments (when available).

- Use third-party SEO platforms that are beginning to introduce generative search tracking, detecting when your content appears in AI summaries or overviews.

- Set up brand and content mention monitoring to identify instances where AI systems reference or summarize your content across different platforms.

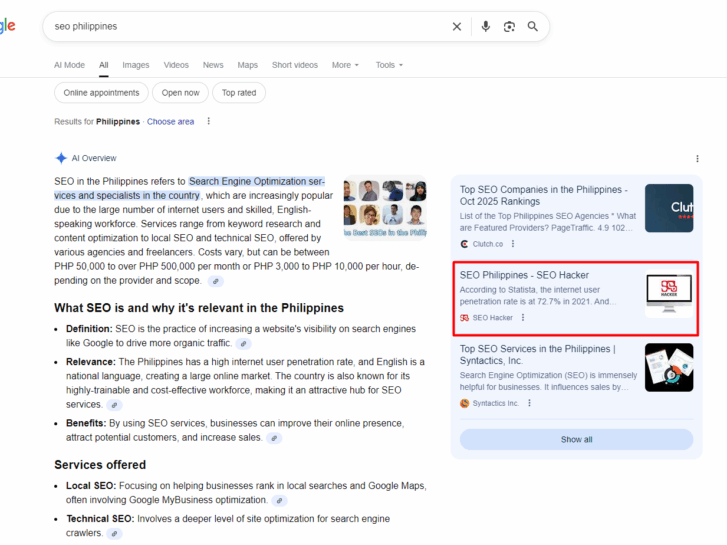

Understanding where your content surfaces requires a closer look at how AI search interfaces work. AI Overviews usually appear as embedded panels within a standard search results page, triggered for queries where Google determines a synthesized answer would be useful.

Within it, you can search for anchor tags pointing to your domain or textual overlaps with your content. However, because these panels change dynamically with user context, model updates, and testing conditions, a single spot-check provides only a snapshot. Long-term tracking is necessary to build an accurate picture of your visibility trends.
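As a rough illustration of the anchor-tag check described above, the sketch below scans a saved copy of a panel's HTML for links that point to your domain. It assumes you have already captured the markup yourself; `sample_panel`, `example.com`, and the function names are hypothetical, and real panel markup will look different.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class DomainLinkFinder(HTMLParser):
    """Collects href values of <a> tags that point at a given domain."""

    def __init__(self, domain: str) -> None:
        super().__init__()
        self.domain = domain.lower()
        self.matches: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        host = (urlparse(href).hostname or "").lower()
        # Match the domain itself or any subdomain (www., blog., and so on).
        if host == self.domain or host.endswith("." + self.domain):
            self.matches.append(href)


def find_domain_links(panel_html: str, domain: str) -> list[str]:
    """Return every link in the captured HTML that targets `domain`."""
    finder = DomainLinkFinder(domain)
    finder.feed(panel_html)
    return finder.matches


# Hypothetical saved snippet; not real AI Overview markup.
sample_panel = """
<div>
  <a href="https://www.example.com/aeo-guide">AEO guide</a>
  <a href="https://other-site.test/post">Another source</a>
  <a href="/relative/link">Internal</a>
</div>
"""

print(find_domain_links(sample_panel, "example.com"))
```

Because a single capture is only a snapshot, a check like this is most useful when it runs on captures collected repeatedly over time.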

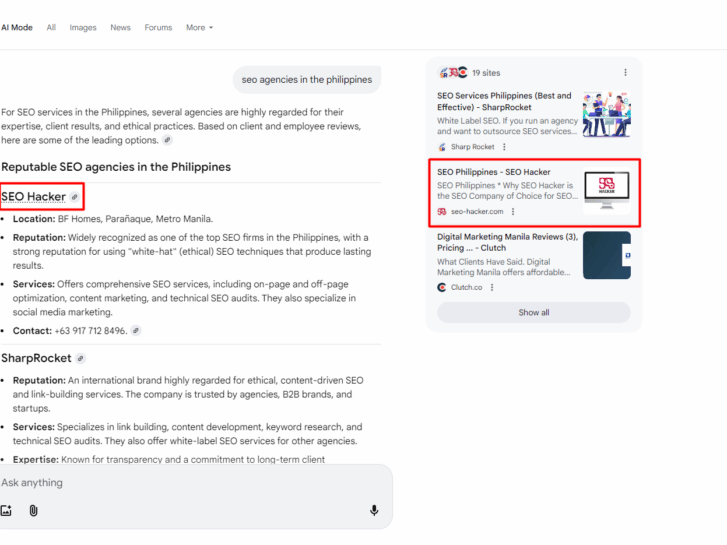

Meanwhile, AI Mode introduces a more conversational environment. Unlike static summaries, it generates multi-turn responses designed to engage users in dialogue. As a result, the retrieval patterns are broader and more reasoning-driven, often drawing from different sources than AI Overviews.

Measuring your presence here requires capturing the entire conversational output and identifying every linked or referenced source. Comparing results between AI Overviews and AI Mode can reveal content biases, preferred sources, and topic coverage gaps that influence how often your content is selected.

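A practical way to quantify your visibility is through AI share of voice. For instance, if you track 100 keywords, find that AI Overviews appear for 25 of them, and see your content featured in 10, your AI share of voice is 10% of all tracked keywords (10 of 100). Measured only against the queries that trigger an AI Overview, the same result is 40% (10 of 25), so state which denominator you use and keep it consistent.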

This metric establishes a baseline for understanding how frequently you appear across generative search experiences. Over time, tracking this percentage helps measure the impact of your optimization efforts and identify opportunities to strengthen your presence within the evolving AI-driven search ecosystem.
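The share-of-voice arithmetic can be sketched in a few lines. The example below reuses the figures from the text (100 tracked keywords, 25 with an AI Overview, 10 featuring your content) and reports the result against both possible denominators. The `KeywordCheck` structure and field names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class KeywordCheck:
    keyword: str
    has_ai_overview: bool  # an AI Overview appeared for this keyword
    cites_us: bool         # our content was featured in that overview


def ai_share_of_voice(checks: list[KeywordCheck]) -> dict[str, float]:
    """Share of voice against all tracked keywords and against overviews only."""
    tracked = len(checks)
    with_overview = sum(c.has_ai_overview for c in checks)
    featured = sum(c.has_ai_overview and c.cites_us for c in checks)
    return {
        "share_of_tracked": featured / tracked if tracked else 0.0,
        "share_of_overviews": featured / with_overview if with_overview else 0.0,
    }


# Illustrative data matching the example: 100 keywords, 25 overviews, 10 features.
checks = (
    [KeywordCheck(f"kw{i}", True, True) for i in range(10)]
    + [KeywordCheck(f"kw{i}", True, False) for i in range(10, 25)]
    + [KeywordCheck(f"kw{i}", False, False) for i in range(25, 100)]
)

print(ai_share_of_voice(checks))
# {'share_of_tracked': 0.1, 'share_of_overviews': 0.4}
```

Reporting both figures makes it easier to tell whether a change comes from AI Overviews appearing more often or from your content being selected more often.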

Summary Inclusions

Summary inclusions show how often your brand or content is directly cited within AI-generated answers, whether in Google’s AI Overviews or other generative platforms. This metric serves as a measure of authority, relevance, and credibility, showing that AI not only recognizes your content but also trusts it enough to include it in its response.

The more frequently your brand is referenced in these summaries, the stronger your visibility and trust become in the eyes of both algorithms and users.

Monitoring summary inclusions provides valuable insight into how effectively your content aligns with what AI deems useful and reliable.

However, tracking inclusions in AI Overviews and AI Mode is primarily manual for now, as most analytics platforms are still adapting to AI-driven search reporting. Some practical ways to monitor when your content is being cited or referenced in AI-generated summaries include:

- Manually monitor AI-generated results for your target keywords using tools like Google’s SGE or Bing Copilot to see if your content is cited or linked in summaries.

- Use third-party tracking tools that are beginning to offer generative search visibility features, which detect brand mentions or links in AI overviews.

- Set up brand and URL mention alerts through platforms like Google Alerts or Mention to capture instances where AI-generated content references your site. Though these tools don’t yet directly track AI results, they can still monitor where and how your brand or content is being referenced online, which can indirectly indicate when AI systems are pulling information from your site.

- Document and compare appearances over time to identify trends in how frequently and where your content is being featured in AI answers.
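One lightweight way to document manual checks like these is a simple observation log that can be exported to a spreadsheet and summarized by month. The sketch below is only an illustration: the `Observation` fields and the sample entries are invented for this example and are not measurements.

```python
import csv
import io
from collections import defaultdict
from dataclasses import asdict, dataclass, fields


@dataclass
class Observation:
    checked_on: str  # ISO date of the manual check, e.g. "2025-01-06"
    platform: str    # where the check was run
    query: str       # the keyword or prompt tested
    cited: bool      # whether our content was cited or linked


def to_csv(observations: list[Observation]) -> str:
    """Serialize the log as CSV text for a spreadsheet or dashboard."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Observation)])
    writer.writeheader()
    for obs in observations:
        writer.writerow(asdict(obs))
    return buffer.getvalue()


def citation_rate_by_month(observations: list[Observation]) -> dict[str, float]:
    """Fraction of checks per month in which our content was cited."""
    seen = defaultdict(lambda: [0, 0])  # month -> [cited, total]
    for obs in observations:
        month = obs.checked_on[:7]
        seen[month][0] += int(obs.cited)
        seen[month][1] += 1
    return {month: cited / total for month, (cited, total) in sorted(seen.items())}


# Invented sample entries for illustration only.
log = [
    Observation("2025-01-06", "AI Overviews", "what is aeo", True),
    Observation("2025-01-20", "AI Overviews", "what is aeo", False),
    Observation("2025-02-03", "AI Overviews", "what is aeo", True),
    Observation("2025-02-17", "Copilot", "what is aeo", True),
]

print(to_csv(log))
print(citation_rate_by_month(log))
```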

Conversational Engagement

Conversational engagement is another AI performance indicator that reflects how actively users interact with your brand within AI-powered chat experiences.

Rather than simply appearing in an AI-generated summary, this metric measures the depth of interaction: how often users mention your brand, ask follow-up questions, or continue queries related to your offerings.

Here are a few effective ways to monitor how users interact with your brand within AI chat environments:

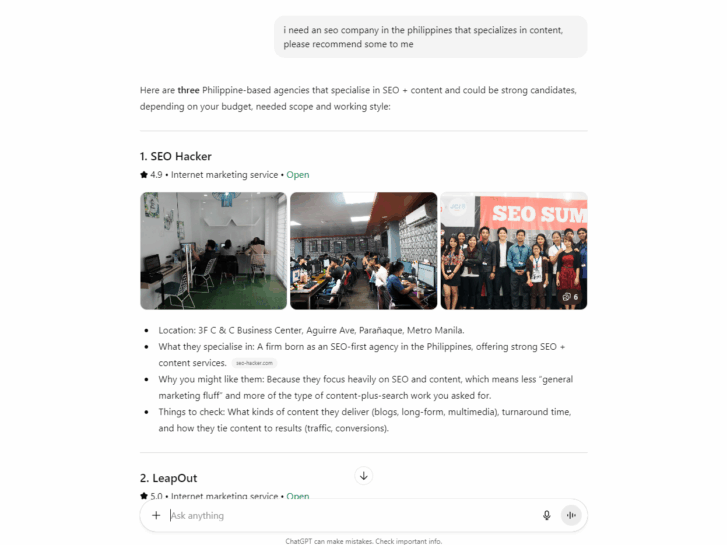

- Track branded follow-up prompts. Observe how often users continue the conversation with additional questions or prompts that mention your brand. This is done by manually testing AI chat interfaces (like Google’s SGE or ChatGPT) using your key topics or brand-related queries, then noting if the AI generates follow-up suggestions or if your brand reappears in subsequent dialogue threads.

- Monitor repeated mentions in AI chat threads. Identify instances where your brand or content is referenced multiple times throughout a single AI interaction. You may test queries around your target keywords and observe whether the AI continues to cite or mention your brand across follow-up responses. Consistent repetition indicates stronger brand association and relevance within conversational contexts.

- Analyze rephrased or expanded branded queries. Look for users who refine or restate their questions involving your brand, showing deeper curiosity or intent. Experiment with variations of branded queries in AI chat interfaces and note if the system continues to associate your brand with related topics or reintroduces it in expanded answers.

Take this exchange I had with ChatGPT as an example:

SEO Hacker was mentioned in both the first and second answers, which suggests that the brand can keep earning mentions across a user’s follow-up prompts.
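If you save the answers from a test conversation, counting brand mentions per turn can be automated. The sketch below assumes the answers have been copied into a list of strings by hand; the brand name "Example Co" and the transcript text are placeholders, not a real exchange.

```python
import re


def mentions_per_turn(answers: list[str], brand: str) -> list[int]:
    """Count case-insensitive whole-phrase mentions of `brand` in each answer."""
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    return [len(pattern.findall(answer)) for answer in answers]


def persistence(answers: list[str], brand: str) -> float:
    """Fraction of turns in which the brand appears at least once."""
    counts = mentions_per_turn(answers, brand)
    return sum(1 for c in counts if c > 0) / len(counts) if counts else 0.0


# Placeholder transcript: one string per AI answer, in conversation order.
answers = [
    "Agencies such as Example Co and others publish AEO guides.",
    "Example Co recommends tracking citations. Example Co also covers schema.",
    "You can also check your server logs for crawler activity.",
]

print(mentions_per_turn(answers, "Example Co"))  # [1, 2, 0]
print(persistence(answers, "Example Co"))
```

A persistence value close to 1 would indicate that the brand keeps reappearing across follow-up turns, which is the pattern described above.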

Dwell Time and Content Interaction

Dwell time and content interaction measure what happens after visibility: how long users stay and engage once they reach your content through AI-driven results. Even in an AI-first search environment, time spent reading or interacting with your page remains a powerful indicator of relevance and satisfaction.

In hybrid search experiences where users discover information through both SERPs and AI overviews, higher dwell time signals that your content not only earned visibility but also fulfilled user intent — proving its depth, usefulness, and credibility in the moments that matter most.

While AI platforms don’t yet provide direct analytics, there are ways to measure these AI performance indicators:

- Use Google Analytics 4 (GA4). Monitor average engagement time and scroll depth for pages frequently surfaced in AI results.

- Track referral sources. Identify sessions that originate from AI-driven search experiences (like Google’s SGE or Bing Copilot) if labeled in traffic sources.

- Analyze session duration trends. Look for increases in on-page time or interactions (clicks, video plays, form fills) on content recently appearing in AI overviews.

- Observe behavioral flow reports. See whether users explore additional pages after landing on your site, which may indicate that AI-driven visitors find the content relevant and worth exploring.

These insights help you gauge whether your content is simply being seen, or genuinely engaging users who arrive through AI-enhanced search experiences.
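Where referrer data is available, sessions can be grouped by likely AI source and compared on engagement time. The sketch below works on an exported list of (referrer URL, engagement seconds) pairs. The hostname list is an assumption to verify against your own analytics, since platforms change domains and often strip referrers, and clicks from AI Overviews arrive as ordinary Google search traffic that this approach cannot separate.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Assumed referrer hostnames for AI assistants; confirm against your own data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "copilot.microsoft.com": "Copilot",
    "gemini.google.com": "Gemini",
}


def classify_referrer(referrer_url: str) -> str:
    """Map a referrer URL to an AI source label, or 'other' if unrecognized."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host, "other")


def average_engagement(sessions: list[tuple[str, float]]) -> dict[str, float]:
    """Average engagement seconds per source for (referrer, seconds) pairs."""
    totals = defaultdict(lambda: [0.0, 0])  # source -> [seconds, sessions]
    for referrer, seconds in sessions:
        source = classify_referrer(referrer)
        totals[source][0] += seconds
        totals[source][1] += 1
    return {source: total / count for source, (total, count) in totals.items()}


# Invented sessions for illustration.
sessions = [
    ("https://chatgpt.com/", 120),
    ("https://www.perplexity.ai/search?q=aeo", 90),
    ("https://www.google.com/", 40),
    ("https://chatgpt.com/", 60),
    ("", 30),
]

print(average_engagement(sessions))
```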

AI Bot Activity

One of the most overlooked signals of visibility is bot activity. Tracking how often AI-related crawlers visit your site can help you understand how frequently your content is being indexed, retrieved, or evaluated for use in generative search results.

Bots such as ChatGPT-User, ClaudeBot, or PerplexityBot regularly scan or request web pages to collect information or serve user queries. A consistent crawl rate typically indicates healthy visibility, while a sudden decline could mean your site has been deprioritized or excluded from certain retrieval pipelines.

By reviewing server logs or bot analytics, you can spot potential issues before they show up in your downstream performance metrics.
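A minimal sketch of that log review is shown below. It assumes access logs in the common combined format, with the user agent as the last quoted field, and counts daily hits for a handful of AI crawler tokens. The sample lines are made up and use simplified user-agent strings; real crawlers send longer strings that contain these tokens.

```python
import re
from collections import Counter
from datetime import datetime

# Tokens to look for inside the user-agent string (case-insensitive).
AI_BOT_TOKENS = [
    "GPTBot", "OAI-SearchBot", "ChatGPT-User",
    "ClaudeBot", "PerplexityBot", "Bytespider",
]

# Combined log format: [timestamp] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'\[(?P<ts>[^\]]+)\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def count_ai_bot_hits(lines: list[str]) -> Counter:
    """Count requests per (day, bot token) for known AI crawlers."""
    hits: Counter = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        ua = match.group("ua").lower()
        day = datetime.strptime(
            match.group("ts"), "%d/%b/%Y:%H:%M:%S %z"
        ).date().isoformat()
        for token in AI_BOT_TOKENS:
            if token.lower() in ua:
                hits[(day, token)] += 1
                break
    return hits


# Made-up log lines with simplified user agents, for illustration only.
sample_lines = [
    '203.0.113.5 - - [12/Mar/2025:10:15:32 +0000] "GET /guide HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.6 - - [12/Mar/2025:11:02:10 +0000] "GET /blog HTTP/1.1" 200 812 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.7 - - [13/Mar/2025:08:45:00 +0000] "GET /guide HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '203.0.113.8 - - [13/Mar/2025:09:00:00 +0000] "GET / HTTP/1.1" 200 100 "-" "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0"',
]

for (day, bot), count in sorted(count_ai_bot_hits(sample_lines).items()):
    print(day, bot, count)
```

User-agent strings can be spoofed, so counts like these are best treated as a trend signal rather than an exact measure.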

Common AI Bots to Track

Below is a summary of major AI crawlers, what they do, and how to manage their access:

| Company / Platform | Bot or User Agent | Primary Function | How to Manage Access |
| --- | --- | --- | --- |
| OpenAI | GPTBot | Gathers data from publicly available pages to train and improve OpenAI models. | Add rules for User-agent: GPTBot in your robots.txt file to allow or block access. |
| OpenAI | OAI-SearchBot | Collects and previews content to power search and link features in ChatGPT; not used for training. | Manage via User-agent: OAI-SearchBot in robots.txt. |
| OpenAI | ChatGPT-User | Fetches content in real time when a ChatGPT user or Custom GPT requests a web page. | Same management method via robots.txt. |
| Anthropic (Claude) | ClaudeBot | Crawls web content to help improve Claude’s knowledge base and training data. | Control access using User-agent: ClaudeBot in robots.txt. |
| Perplexity AI | PerplexityBot | Indexes web pages to power Perplexity’s AI answer engine. | Rules for User-agent: PerplexityBot can be set in robots.txt. |
| Google (Gemini / AI Overviews) | Google-Extended | Acts as an opt-out flag indicating whether content can be used in AI training or enhanced features. | Declared in robots.txt as User-agent: Google-Extended. |
| Google (Gemini / AI Overviews) | Googlebot Family | Core crawlers that index content for Search, Images, Video, and News; also supply data to AI-driven results. | Manage using the specific Googlebot names (e.g., User-agent: Googlebot). |
| Microsoft / Bing / Copilot | bingbot | Main Bing crawler whose indexed content supports both Search and Copilot experiences. | Configure permissions for User-agent: bingbot. |
| Meta (Facebook / Instagram) | FacebookBot, facebookexternalhit, meta-externalagent | Used primarily for generating link previews; may also inform AI models in limited ways. | Permissions can be set using the listed user-agent strings. |
| ByteDance (TikTok / CapCut / Toutiao) | Bytespider | General-purpose crawler that indexes public content, sometimes feeding TikTok’s AI features. | Manage via User-agent: Bytespider in robots.txt. |
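Before relying on robots.txt rules like those in the table, it can help to check how a given set of rules would be interpreted for each bot. The sketch below uses Python's standard `urllib.robotparser` on an example rule set. The rules, the `example.com` URLs, and the `access_report` helper are illustrative assumptions, not recommendations, and individual crawlers may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Example rules only: block GPTBot, allow OAI-SearchBot, restrict /private/ for all.
robots_txt = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: *
Disallow: /private/
"""


def access_report(robots_body: str, user_agents: list[str], url: str) -> dict[str, bool]:
    """Return whether each user agent may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_body.splitlines())
    return {ua: parser.can_fetch(ua, url) for ua in user_agents}


bots = ["GPTBot", "OAI-SearchBot", "ClaudeBot"]
print(access_report(robots_txt, bots, "https://www.example.com/guide"))
# {'GPTBot': False, 'OAI-SearchBot': True, 'ClaudeBot': True}
print(access_report(robots_txt, bots, "https://www.example.com/private/report"))
# {'GPTBot': False, 'OAI-SearchBot': True, 'ClaudeBot': False}
```

In this example ClaudeBot has no dedicated group, so it falls back to the wildcard rules.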

How to Measure AEO Performance

Understanding AEO performance indicators is only the first step. The real impact comes from turning insights into measurable action, which requires a structured approach.

To track key metrics such as AI impressions, summary inclusions, conversational engagement, and dwell time, create dashboards that give you a clear picture of how your content performs in AI-driven search environments.

Identify Key AEO Metrics

Start by determining which performance indicators matter most for your goals. Focus on metrics such as AI impressions, summary inclusions, conversational engagement, and dwell time. These metrics capture your overall AI-driven search presence.

Combine Active and Passive Tracking Methods

Measuring performance in Answer Engine Optimization (AEO) requires using both active and passive tracking approaches. Together, they help capture the full spectrum of visibility, engagement, and authority signals that reflect how your content performs within AI-driven search environments.

Active tracking involves hands-on observation of where and how your content appears within generative search results.

This can include running your target keywords in tools such as Google’s Search Generative Experience (SGE), documenting when your brand or pages are mentioned, and testing branded variations of your queries to identify follow-up prompts and conversational references.

Regular testing helps uncover new opportunities and spot shifts in AI-driven ranking behavior.

Passive tracking, by contrast, collects data automatically through system logs and analytics platforms.

This approach reveals how users and AI systems interact with your content behind the scenes. By analyzing server logs, you can see when AI crawlers fetch your pages, how frequently they return, and whether those visits change over time.

Combined with analytics data—such as dwell time, engagement rates, and interaction patterns—passive tracking gives you a deeper view of how your content performs once it’s surfaced by AI systems.

Modern SEO and analytics tools are beginning to offer specialized features for AEO tracking. Platforms like SE Ranking’s AI Search Toolkit can help you:

- Monitor when and where AI tools mention your brand or link to your pages.

- Identify visibility gaps and opportunities across multiple AI search engines.

- Compare your domain’s inclusion rate and brand mentions against competitors.

- Review how AI-generated answers reference your content for specific prompts.

- Evaluate and benchmark overall AI search visibility across multiple domains.

Build the Dashboard Framework

Organize your collected data into a dashboard that brings together all key AEO metrics such as AI impressions, summary inclusions, conversational engagement, and dwell time. This framework makes it easier to identify patterns, track trends, and compare performance across different indicators.

A well-structured dashboard turns raw data into actionable insights, helping you make informed decisions to optimize your content for AI-driven search.

To enrich your dashboard with deeper insight, consider integrating additional data sources and monitoring methods:

- Clickstream data from trusted third-party providers can help approximate visibility by observing user behavior across the broader web. Monitoring AEO-affected queries in this way allows you to estimate click-through rates (CTR) and identify where your content is likely being cited or surfaced in AI responses.

- Server log analysis offers visibility into how AI bots interact with your site. By filtering logs for known AI user agents, you can measure crawl frequency and detect any drops or spikes that may signal changes in retrieval or ranking behavior.

- Direct monitoring provides the most accurate view of your presence in generative search. Browser automation frameworks can run your target queries, capture full generative outputs, and extract citations from AI-generated responses. Repeating this process regularly creates a longitudinal dataset that tracks how your inclusion in AI results evolves over time.
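For the server log item above, one simple way to surface drops or spikes is to compare each bot's latest weekly crawl count with its recent average. The sketch below assumes weekly counts have already been extracted from the logs. The thresholds (half or double the trailing four-week average) and the sample numbers are arbitrary illustrations that you would tune to your own traffic.

```python
from statistics import mean


def flag_crawl_changes(
    weekly_hits: dict[str, list[int]],
    window: int = 4,
    drop_ratio: float = 0.5,
    spike_ratio: float = 2.0,
) -> dict[str, str]:
    """Flag bots whose latest weekly count deviates from the trailing average."""
    flags: dict[str, str] = {}
    for bot, counts in weekly_hits.items():
        if len(counts) <= window:
            continue  # not enough history to form a baseline
        baseline = mean(counts[-window - 1:-1])  # the weeks before the latest one
        if baseline == 0:
            continue
        ratio = counts[-1] / baseline
        if ratio <= drop_ratio:
            flags[bot] = "drop"
        elif ratio >= spike_ratio:
            flags[bot] = "spike"
    return flags


# Invented weekly crawl counts, oldest first.
weekly_hits = {
    "GPTBot": [40, 42, 38, 40, 12],
    "ClaudeBot": [10, 12, 11, 9, 10],
    "PerplexityBot": [5, 5, 5, 5, 15],
}

print(flag_crawl_changes(weekly_hits))
# {'GPTBot': 'drop', 'PerplexityBot': 'spike'}
```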

Creating an integrated dashboard helps you connect technical data with real performance results. Over time, it becomes your main hub for tracking AEO, making it easier to measure impact, spot changes in AI behavior, and keep improving your optimization strategy.

Visualize and Analyze Data

Use charts, tables, and trend lines to interpret your AEO metrics clearly and effectively. Visualizing the relationships between metrics will help you see which content truly resonates with users.

Analyzing these patterns allows you to identify high-performing content, spot opportunities for improvement, and make data-driven decisions that enhance visibility, authority, and engagement within AI-powered search environments.

Optimize Based on Insights

Finally, use your dashboard to refine strategies. Identify content gaps, enhance authority, and adjust messaging to improve visibility and engagement across AI-driven platforms. This approach bridges the gap between theory and practice, transforming abstract AEO concepts into measurable actions that strengthen your brand’s presence in the AI-driven search landscape.

Repeated Tracking

Tracking share of voice in AEO is more complex than it is in traditional search because there’s no fixed results page to measure. In classic SEO, rankings were stable enough to scrape and compare over time. You could identify changes tied to updates or competitors with relative confidence.

In generative search, however, results are dynamic. The same query can return different answers from one test to the next, even under identical conditions. The AI’s retrieval and synthesis processes constantly shift what’s displayed based on random sampling, evolving index data, and personalization signals.

Because of this, share of voice can no longer be viewed as a static percentage of rankings held—it’s better understood as a probability distribution of visibility over multiple observations. Measuring it effectively means running repeated tests, aggregating the results, and looking for patterns in how often and where your brand appears.
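To make the "probability of visibility" idea concrete, the sketch below turns repeated checks of the same query into an inclusion rate with an uncertainty range. It uses the Wilson score interval, which is one common choice for proportions from small samples and is my assumption here rather than a method prescribed by any search platform. The 20 observations are invented.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if trials == 0:
        return (0.0, 0.0)
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return (max(0.0, center - half), min(1.0, center + half))


def inclusion_estimate(observations: list[bool]) -> dict[str, float]:
    """Summarize repeated checks as a rate plus a lower and upper bound."""
    trials = len(observations)
    successes = sum(observations)
    low, high = wilson_interval(successes, trials)
    rate = successes / trials if trials else 0.0
    return {"rate": rate, "low": low, "high": high}


# Invented results of running the same query 20 times: cited in 7 runs.
observations = [True] * 7 + [False] * 13

print(inclusion_estimate(observations))
```

With only 20 runs the interval is wide, which is a useful reminder that a handful of spot-checks cannot distinguish a real change from normal variation.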

Repeated tracking also helps validate and strengthen other AEO metrics. For example:

- It provides context for AI impressions, showing how often your content is surfaced over time rather than in a single instance.

- It clarifies fluctuations in AI citations or mentions, revealing whether they are temporary or part of a longer trend.

- It supports dashboard-level insights, connecting short-term volatility with long-term performance averages.

By combining repeated tracking with your dashboard metrics—such as impressions, inclusion rates, conversational engagement, and crawl frequency—you can develop a more accurate picture of your brand’s true presence within generative search. This ongoing, iterative approach ensures you’re measuring visibility as a living system rather than a single static result.

Interpreting Answer Engine Analytics

The data you track in your AEO dashboard provides a framework for understanding how to measure AEO performance beyond traditional metrics. When your brand appears in AI-generated summaries or when dwell time on linked pages increases, it signals that your content is both relevant and trusted by AI systems.

AI favors authoritative, well-structured, and semantically rich content, so tracking which pages are cited or surfaced helps reveal what performs best. These insights can guide improvements in content structure, topic depth, and schema optimization, ensuring your brand earns not only visibility but also authority and engagement.

Connecting AI visibility to outcomes like traffic, conversions, and revenue brings the full picture into focus. In Google Analytics, start by segmenting landing pages tied to queries that trigger AI panels. If traffic declines while conversions hold steady, your content may be capturing only the most intent-driven users.
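The traffic-versus-conversions comparison is simple arithmetic, sketched below for one landing-page segment across two periods. The session and conversion figures are invented to illustrate the pattern described above, where traffic falls while conversions hold steady.

```python
def conversion_rate(sessions: int, conversions: int) -> float:
    """Conversions divided by sessions, or 0.0 when there were no sessions."""
    return conversions / sessions if sessions else 0.0


def compare_periods(before: tuple[int, int], after: tuple[int, int]) -> dict[str, float]:
    """Compare (sessions, conversions) pairs for two periods."""
    (s0, c0), (s1, c1) = before, after
    return {
        "traffic_change": (s1 - s0) / s0 if s0 else 0.0,
        "rate_before": conversion_rate(s0, c0),
        "rate_after": conversion_rate(s1, c1),
    }


# Invented figures: sessions fall from 1,000 to 800 while conversions stay at 50.
result = compare_periods(before=(1000, 50), after=(800, 50))
print(result)
# {'traffic_change': -0.2, 'rate_before': 0.05, 'rate_after': 0.0625}
```

Here traffic drops 20% while the conversion rate rises from 5% to 6.25%, consistent with the remaining visitors being more intent-driven.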

Even without clicks, AI citations still drive value. Mentions in authoritative answers can increase branded search and direct visits over time. Tracking these assist signals helps quantify how generative visibility contributes to broader brand lift and long-term growth.

Key Takeaway

Success in AEO goes beyond clicks and rankings. It’s about being seen, cited, and trusted within the AI layer of search, where users engage directly with generative results. True performance is measured not just by traffic, but by visibility, authority, and meaningful engagement across AI-driven platforms.

To measure AEO performance effectively, focus on AI-native KPIs such as AI impressions, summary inclusions, conversational engagement, and dwell time. These metrics reflect how your content participates in the