Google Updates The Search Console Index Coverage Report

Google recently updated the Index Coverage report in Search Console. They’re releasing 4 major changes that will help webmasters gain a deeper understanding of how Google crawls and indexes their websites. These updates come from the feedback that webmasters gave Google on how to refine and improve the report. That said, let’s find out what the changes are and how they can help SEO.

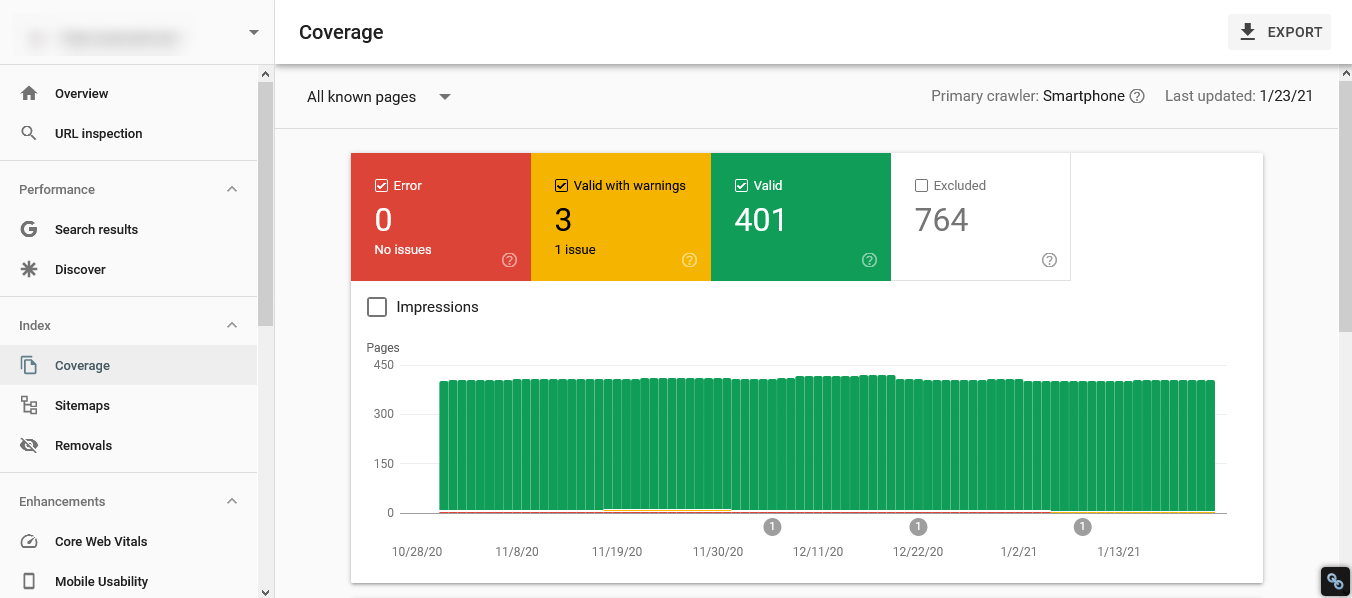

Google Search Console Index Coverage Report

The Index Coverage report was introduced during the roll-out of the revamped Google Search Console, along with other features. So, unlike other updated Google tools, it is a relatively new feature that has just been upgraded. But along with Google’s constant updates, their tools and other features need to be updated as well to better help us webmasters and SEOs bring the best possible results to users. Here is the update:

Index Coverage Report Updates

According to Google’s recent blog post, the Index Coverage report received 4 updates:

- Removal of the generic “crawl anomaly” issue type

- Pages that were submitted but blocked by robots.txt and got indexed are now reported as “indexed but blocked” (warning) instead of “submitted but blocked” (error)

- Addition of a new issue: “indexed without content” (warning)

- Soft 404 reporting is now more accurate

Let’s run through the important updates one by one.

Removal of the broad “Crawl Anomaly” Issue

This is one of the most important updates because, ever since the Index Coverage report was introduced, the crawl anomaly has been one of the most difficult errors to fix. The error did not say what actually went wrong, so we had to change the small but important factors that affect crawling one at a time, then wait to see whether that factor was in fact the reason behind the anomaly.

But now that the “crawl anomaly” issue has been removed, the report names the specific error behind each affected page, which helps webmasters and SEOs fix it faster.

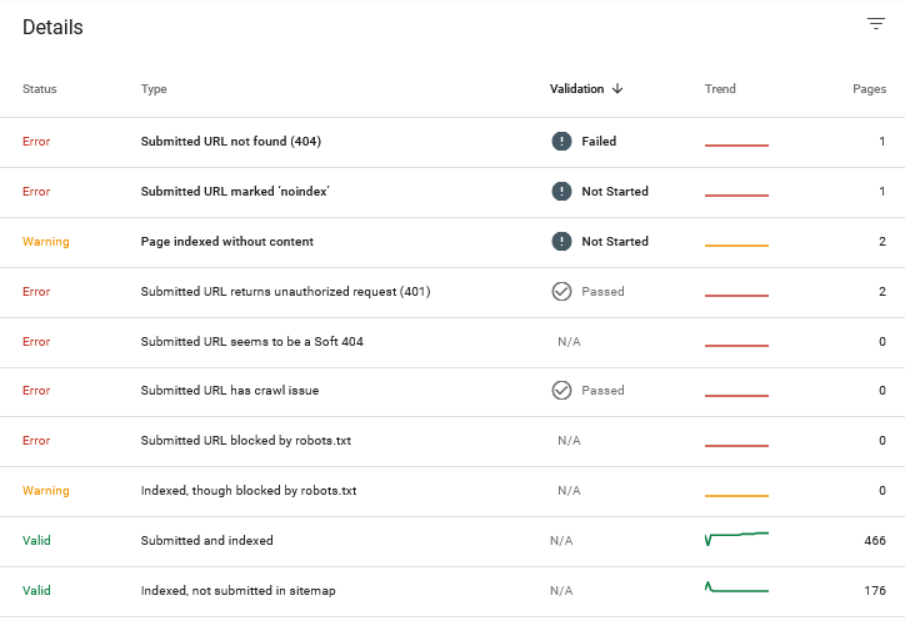

In the screenshot above, the “Crawl Anomaly” issue that we always saw has been replaced by different errors. Since we now know the specific errors instead of the broad “crawl anomaly”, we can start fixing them immediately.

Indexed but Blocked (Warning)

This is a pretty simple update that gives webmasters more clarity about the status of the URLs they submit, since there is a clear difference between a page that has only been submitted and a page that was indexed after submission.

But what’s important to note here is that this is an indexed page that Google can’t crawl anymore because it’s being blocked by your robots.txt file. So, fix this immediately. Either remove the rule that blocks the page from your robots.txt file, or have the page deindexed by Google if you don’t want it showing up in the search results. Keep in mind that if you deindex with a noindex tag, Google has to be able to crawl the page to see that tag.
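To find candidate pages before they show up in the report, you can test your own URLs against your robots.txt rules. The snippet below is a minimal sketch using Python’s standard `urllib.robotparser`; the robots.txt content, the example.com URLs, and the `blocked_urls` helper are all made up for illustration. Python’s parser also does not replicate every detail of how Googlebot matches rules, so treat the result as a first pass rather than a final answer.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent.

    Hypothetical helper for illustration; it only approximates Googlebot.
    """
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Made-up robots.txt and URLs, used only to demonstrate the check.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

SAMPLE_URLS = [
    "https://example.com/blog/post",
    "https://example.com/private/report",
]

if __name__ == "__main__":
    for url in blocked_urls(SAMPLE_ROBOTS, SAMPLE_URLS):
        print("blocked:", url)
```

In practice you would paste in your real robots.txt and the list of URLs from your sitemap, then compare the blocked ones against what the report shows.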

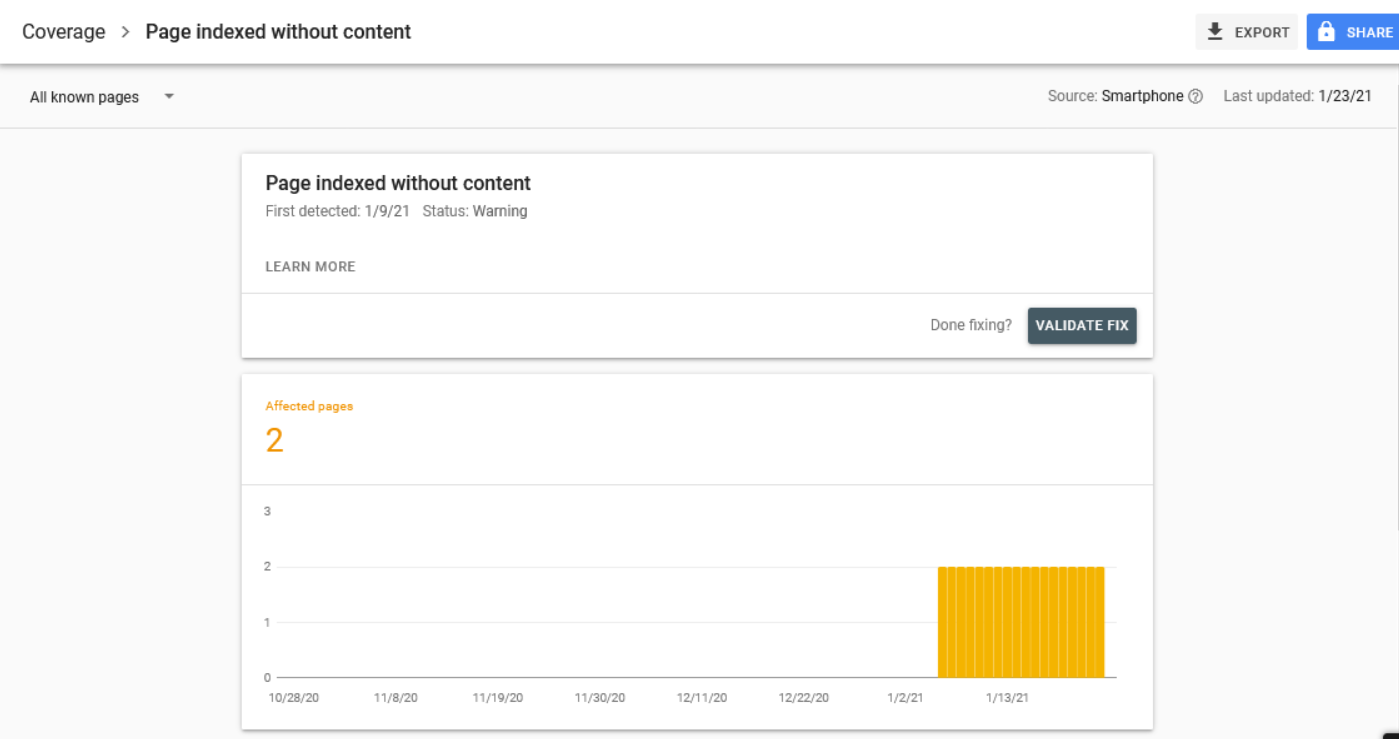

Indexed Without Content

This is a new type of warning that Google has introduced. This warning is basically Google telling you that they have crawled and indexed your page but they can’t see the contents of the page. There are three possibilities why this is happening:

- You’ve published a blank page – Remove or update this page immediately, since leaving it crawlable and indexable just wastes your crawl budget.

- You’re cloaking the page – Whether intentional or not, do not do this. Cloaking your page from Google is a black hat tactic that could lead to penalties for your site.

- Your page’s format is not supported by Google – Use the URL Inspection Tool to check how Google sees your page and find out why they’re not seeing its content.
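For the blank page case, a quick local check can flag pages whose HTML contains no visible text. The sketch below uses only Python’s standard `html.parser`. The `visible_text` and `looks_empty` names and the `min_chars` threshold are assumptions made for this example, not Google’s criteria, and it does not run JavaScript, so pages that render their content client-side will look empty here even if they display fine in a browser.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text that sits outside non-visible elements."""

    # Text inside these tags is not shown as page content.
    SKIPPED_TAGS = {"script", "style", "noscript", "template", "title"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-blank text found outside the skipped tags.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the visible text of an HTML string, joined by single spaces."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def looks_empty(html, min_chars=1):
    """True when the page has fewer than min_chars of visible text.

    min_chars is an arbitrary threshold chosen for this sketch.
    """
    return len(visible_text(html)) < min_chars
```

Any page this flags is worth opening in the URL Inspection Tool to see what Google actually rendered.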

Here’s what the warning looks like:

When you scroll down on the report, you’ll be able to see which pages are flagged with this warning, and I suggest you fix them immediately.

Key Takeaway

It might not sound like a major update, and that’s because it isn’t. But it is a helpful one for webmasters and SEOs. Knowing which pages have which specific errors can help us save time, energy, and even money. It allows us to know accurately which errors are plaguing our pages and react immediately. To summarize, the majority of the updates aim to improve data accuracy in the reports. What do you think of the update to the Index Coverage Report? Let me know in the comments below!