How to Remove a URL of Your Website from Google Search

In SEO, one way to gauge the growth of a website is to track the amount of content that is added over time. However, there will be cases where you want to remove some of your content from Google’s index.

Removing URLs from Google Search is a normal process, and there is more than one way to do it. It is not complicated, but it is important to follow the steps correctly to avoid negatively affecting your SEO.

In this article, I will take you through everything you need to know about removing a URL of a website that you own from Google Search.

When should you remove URLs from Google Search?

Duplicate Content

There may be occasions where you accidentally publish duplicate content on your website. Removing the URLs that contain the duplicate content from Google Search avoids the risk of getting a penalty.

Outdated Content

If you have a website that has been running for a few years, chances are there is some outdated content on it, such as old blog posts. Outdated content doesn’t even have to be aged content. It can also be old product pages for items that are no longer available or old promotions that have expired.

Hacked Content

If your website experienced a security breach and hacked content starts appearing on it, chances are Google may still crawl and index those pages. To keep users from reaching these hacked pages, it is best to remove them from Google Search while you clean up your website and strengthen its security.

Private Content

If your website includes private content that should only be available to you and other people on your team, then you should remove it from the search results. If not, it will be accessible to the public and people may get access to confidential information.

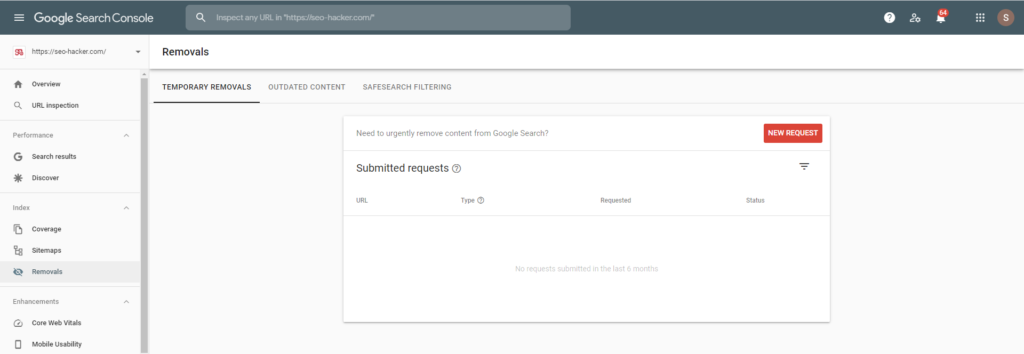

Temporary Removal Using Google Search Console Removals Tool

In Google Search Console, there is a tool called “Removals” under the Indexing section that you can use to directly request the temporary removal of a specific URL or a list of URLs from Google’s index. Take note that the Google Search Console account you are using must be a verified owner of the website.

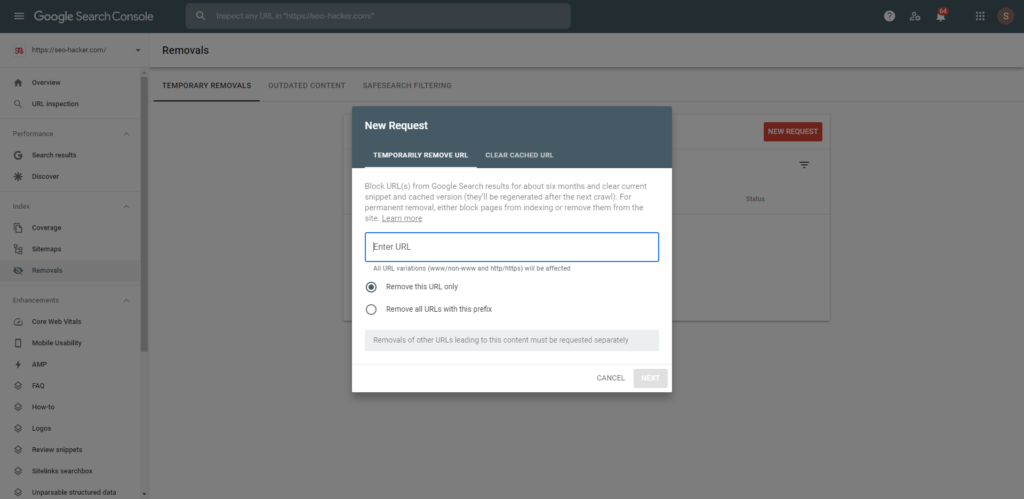

In the Removals tool, simply click on New Request and a box will appear. You can either request to remove a specific URL only or remove all URLs that share a specific prefix. Unless you are removing thousands of URLs, I would highly recommend removing specific URLs one by one, because a prefix-based removal may accidentally take out an important page of your website.

A removal through Google Search Console’s Removals tool is only temporary. Once approved, the URLs will not appear in Google Search results for a maximum of 6 months or until you request that these URLs be indexed again.

There is also another option called “Clear Cached URL” where instead of completely removing the URL from Google Search, it will only clear the snippet (title tag and meta description) and the cached version of the webpage until Google crawls it again.

There are two other sections in the Removals tool. Google allows users to report outdated content or adult-only content in search results. If users report webpages on your website, the reports will fall under one of these two:

User Reports Outdated Content

If a user sees content in Google search results that no longer exists on a website, they can report it via the Remove Outdated Content tool. You will find the reported URLs and their status in the Removals tool.

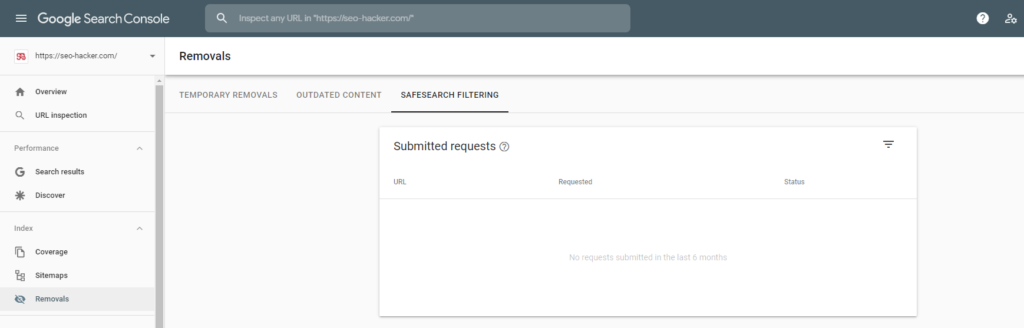

User Reports Sensitive Content

Users can report to Google if your website has sensitive or adult content. URLs reported by users will be found under SafeSearch Filtering. If Google approves the report, the reported URLs will not appear in the search results when SafeSearch is on.

If you think that user reports against URLs on your website are unjustified, whether they are for Outdated Content or Sensitive Content, you should seek help via Google’s Webmaster Forum.

Permanent URL Removal Methods

Delete the Content

If you want to completely remove a page from Google’s index, deleting the page so that it returns a 404 error to Google is the first option you should look at. Do this only if the page you would like to remove from Google Search is no longer needed on your website at all.

One of the most common myths in SEO is that having 404 errors on your website hurts your SEO. That is only true when the 404s are on important pages, for example pages that were deleted by accident. Otherwise, 404s are completely natural and Google understands this.

After the page is taken down and Google crawls it again, it may take a few days for the deleted page to be removed from Google Search.

Noindex tag

If you want to remove a page from Google’s index but do not necessarily want to delete the page completely from your website, placing a meta robots noindex tag on the webpage is the way to go.

Here’s the code that should be found in the <head> section of the page you want to be removed:

<meta name="robots" content="noindex">
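To confirm that a page actually carries the tag, its HTML can be checked programmatically. The following is a minimal sketch in Python using only the standard library; the function names `robots_directives` and `is_noindexed` are illustrative and not part of any Google tool. It only inspects `<meta name="robots">` tags and assumes you already have the page's HTML as a string.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() != "robots":
            return
        for directive in attr_map.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html):
    """Return the set of robots meta directives found in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def is_noindexed(html):
    """True if the page asks search engines not to index it.

    "none" is treated as equivalent to "noindex, nofollow".
    """
    directives = robots_directives(html)
    return "noindex" in directives or "none" in directives
```

For example, `is_noindexed('<meta name="robots" content="noindex">')` is true, while a page that only has `content="nofollow"` is not reported as noindexed. A tag pasted with typographic (curly) quotes instead of straight quotes would not match here either, which is a common reason a noindex tag silently fails.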

Make sure that Google is able to crawl the noindexed page, so don’t block it in the robots.txt file. If you want Google to see the noindex tag as soon as possible, I would recommend including all the pages you placed a noindex tag on in the XML sitemap of your website. This will expedite the process.

After a few days, you will see a Coverage error in your Google Search Console account: “Submitted URL marked noindex”. This means Google has seen the noindex tag, and you can now remove the URLs from your sitemap.
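If your CMS does not let you control the sitemap directly, a small temporary sitemap listing only the noindexed pages can be generated by hand. This is a rough sketch using Python's standard library; `build_sitemap` is a hypothetical helper and the URLs you pass in are your own.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serializing.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

The resulting string can be saved as, say, `noindex-sitemap.xml` (a made-up file name) and submitted in Google Search Console, then removed once Google has processed the pages.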

301 Redirect

You should only use this option if the URL you would like to remove from the search results has moved to a new URL. A 301 redirect will not directly remove the old URL from the search results, but once Google has crawled the old URL and seen the 301 redirect, it will replace the old URL with the new one.
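How a 301 is configured depends on your server or CMS, but the behavior is the same everywhere: the old URL answers with status 301 and a `Location` header pointing at the new URL. Here is a minimal sketch as a Python WSGI application; the `MOVED` mapping and both paths are made-up examples.

```python
# Hypothetical mapping of old paths to their new locations.
MOVED = {
    "/old-page": "/new-page",
}


def app(environ, start_response):
    """Answer moved paths with a 301 redirect; serve everything else normally."""
    path = environ.get("PATH_INFO", "/")
    target = MOVED.get(path)
    if target is not None:
        # A permanent redirect tells crawlers the page has moved for good.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"This page is live.\n"]
```

For local experiments, the app can be served with `wsgiref.simple_server.make_server` from the standard library. On a production site the same rule would more likely live in the web server configuration or a redirect plugin.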

Use password protection

If you want to keep a specific webpage or a section of your website out of the search results and away from unauthorized users, you should set up a login system. You could also use IP whitelisting. This not only blocks Google and other search engines from crawling and indexing any content inside but also adds an extra layer of security for your website.
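To illustrate why a login wall keeps crawlers out, here is a small sketch of HTTP Basic authentication as a Python WSGI application. Anyone without credentials, including Googlebot, receives a 401 response instead of the content. The username, password, and realm are placeholders, and a real site should rely on its framework's or server's authentication rather than this simplified example.

```python
import base64
import hmac

# Placeholder credentials for illustration only; load real ones from secure config.
USERNAME = "team"
PASSWORD = "change-me"


def is_authorized(header):
    """Check an Authorization header value against the expected credentials."""
    if not header or not header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(header[len("Basic "):], validate=True).decode("utf-8")
    except ValueError:  # covers invalid base64 and invalid UTF-8
        return False
    username, sep, password = decoded.partition(":")
    if not sep:
        return False
    # compare_digest avoids leaking information through comparison timing.
    user_ok = hmac.compare_digest(username.encode(), USERNAME.encode())
    pass_ok = hmac.compare_digest(password.encode(), PASSWORD.encode())
    return user_ok and pass_ok


def protected_app(environ, start_response):
    """Serve private content only to authenticated requests."""
    if not is_authorized(environ.get("HTTP_AUTHORIZATION")):
        start_response("401 Unauthorized", [
            ("WWW-Authenticate", 'Basic realm="Private"'),
            ("Content-Type", "text/plain; charset=utf-8"),
        ])
        return [b"Authentication required.\n"]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Private content.\n"]
```

Basic authentication sends credentials with every request, so it should only be used over HTTPS.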

Common Mistakes

Blocking a URL from crawling using robots.txt

When you block a URL from being crawled in the robots.txt file, it only prevents Google from re-crawling the URL but it does not remove it from Google’s index.

Blocking with robots.txt after URL removal method

This might confuse some, but all you have to remember is that once you have removed a URL using one of the methods mentioned in this article, you should not immediately block it in your robots.txt file. If you do, Google will be unable to crawl the URL and see the change you made, whether that is a 404, a noindex tag, or a 301 redirect.

You can still use robots.txt, but only once you have confirmed that the URLs were completely removed from Google Search. At that point, you can use the robots.txt file to make sure that they don’t get crawled and indexed again.
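Before relying on any of the removal methods, it can help to check that the URL is not already blocked in robots.txt. The sketch below uses Python's standard `urllib.robotparser`; `is_crawlable` is an illustrative helper, and the parser's matching is simpler than Google's own (it does not understand wildcards, for instance), so treat the result as a rough check only.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With a robots.txt that contains `User-agent: *` followed by `Disallow: /private/`, a URL under `/private/` is reported as not crawlable, which means Google could not see a noindex tag or a 404 on that page.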

Adding a nofollow tag only

When using the meta noindex tag, you can add a nofollow after it. A noindex tag on a webpage only tells Google not to show the page in the search results, but Google can still crawl the page and all the links in it. If you don’t want Google to follow those links, adding nofollow after noindex in the meta tag tells Google not to follow any of the links on the webpage.

However, some people use only the nofollow tag without the noindex tag, so make sure you check that you’ve placed the right tag.

Key Takeaway

Removing URLs from Google Search may seem counterintuitive, but remember that this is a normal process and it will not necessarily hurt your SEO. Always make sure that the URL you are removing from Google’s index is no longer important to your website to avoid losing traffic and revenue.