GoogleBot’s User Agent Update Rolls Out in December

GoogleBot optimization can be tricky, especially since you cannot control how the spider perceives your site. Google has announced that it will update GoogleBot’s user agent in December, which is also a call for us SEOs to rethink how we optimize for Google’s crawlers.

This update is a good signal of how much Google values freshness, because the user-agent strings will now reflect newer browser versions. If they thought of a system for this, what more for content and user experience, right? So for those who rely on questionable practices like user-agent sniffing, I suggest sticking to white-hat practices and reaping the results from them.

What is a User Agent?

For the non-technical person, “user agent” can be an alien term, but they have been using one every day that they explore the web. A user agent is the software, such as a browser or a crawler, that requests and retrieves web content on someone’s behalf, and it identifies itself to the server with a user-agent string. As an SEO, you are part of that chain of communication. It is good practice to optimize for user agents, but not to the point that you exploit them to turn things in your favor.

There are many types of user agents, but we will focus on the one that matters to SEO. A user agent goes to work whenever a website is requested and loaded. For search, GoogleBot is the one that does this: it retrieves content from sites so that Google can index it and serve it in response to what users search for.

Simply put, the user agent turns user actions into requests to the server. The user-agent string also tells the server about the software making the request, and often the device and operating system, so that a site can tailor its response.

How is the update going to change the way we optimize for crawlers?

Googlebot’s user agent strings will be periodically updated to match Chrome updates, which means they will reflect the version of Chrome that Googlebot is actually running.

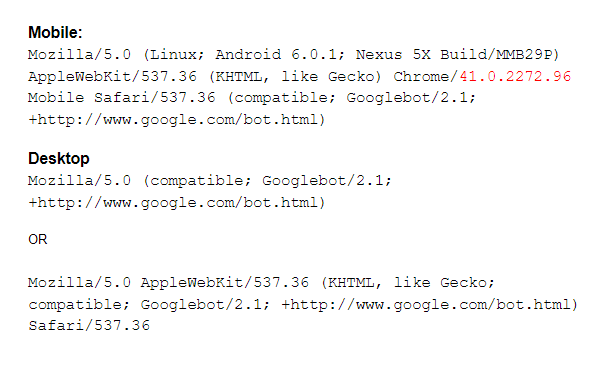

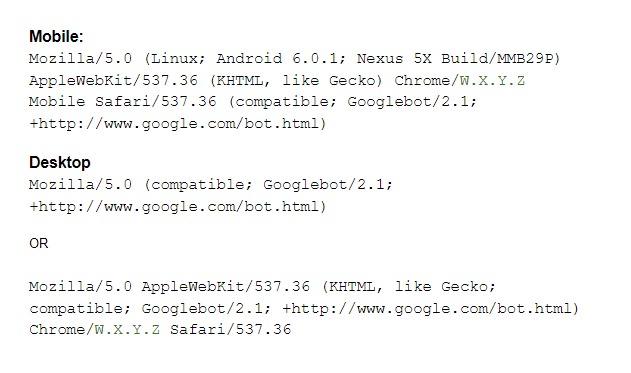

This is what Googlebot user agents look like today:

And this is how it will look like after the update:

Notice the slight change in the user agent strings, “W.X.Y.Z”? This placeholder will be substituted with the Chrome version that Googlebot is using. Google gives this example: instead of W.X.Y.Z, the user agent string will show something similar to “76.0.3809.100.” They also said that the version number will be updated on a regular basis.

How is this going to affect us? For now, Google says don’t fret. They are confident that most websites will not be affected by this slight change. If you are optimizing in accordance with Google’s guidelines and recommendations, then you don’t have anything to worry about. However, they stated that if your site looks for a specific user agent string, you may be affected by the update.
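To make that last point concrete, here is a minimal Python sketch of a check that keeps working when the Chrome version changes. The sample strings are illustrative, modelled on the format described above with Google’s example version filled in, and the names `is_googlebot` and `chrome_version` are my own, not anything Google provides. Keep in mind that anyone can send a Googlebot user agent string, so treat this as identification, not verification.

```python
import re
from typing import Optional

# Illustrative sample strings (assumptions for this sketch, not copied from your logs).
LEGACY_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
NEW_DESKTOP_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "Googlebot/2.1; +http://www.google.com/bot.html) "
    "Chrome/76.0.3809.100 Safari/537.36"
)
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36"
)

# Match the stable "Googlebot/<major>.<minor>" token, not the whole string.
GOOGLEBOT_TOKEN = re.compile(r"\bGooglebot/\d+\.\d+", re.IGNORECASE)
# Capture a four-part Chrome version such as 76.0.3809.100, if one is present.
CHROME_VERSION = re.compile(r"\bChrome/(\d+(?:\.\d+){3})\b")


def is_googlebot(user_agent: str) -> bool:
    """Return True if the string claims to be Googlebot, whatever the Chrome version."""
    return GOOGLEBOT_TOKEN.search(user_agent) is not None


def chrome_version(user_agent: str) -> Optional[str]:
    """Return the Chrome version in the string, or None if there is none."""
    match = CHROME_VERSION.search(user_agent)
    return match.group(1) if match else None


for ua in (LEGACY_UA, NEW_DESKTOP_UA, BROWSER_UA):
    print(is_googlebot(ua), chrome_version(ua))
```

An exact comparison against one pinned string would stop matching as soon as the version number moves, which is the kind of breakage Google is warning about.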

What are the ways to optimize better for GoogleBot?

It is better to use feature detection than to be obsessed with detecting the user agent of your visitors. Google is also kind enough to provide tools, like Search Console (formerly Webmaster Tools), that help you optimize your site.

Googlebot optimization is as simple as being vigilant about fixing errors on your site. It goes without saying that you shouldn’t over-optimize, and that includes browser sniffing. Optimizing according to the web browser that a visitor is using becomes lazy work in the long run because it means your approach to optimizing sites is not holistic.

The web is continually progressing, which means that as webmasters, we have to think on our feet to keep up with software and algorithm updates. To do that, here are some ways that can help you succeed in Googlebot optimization.

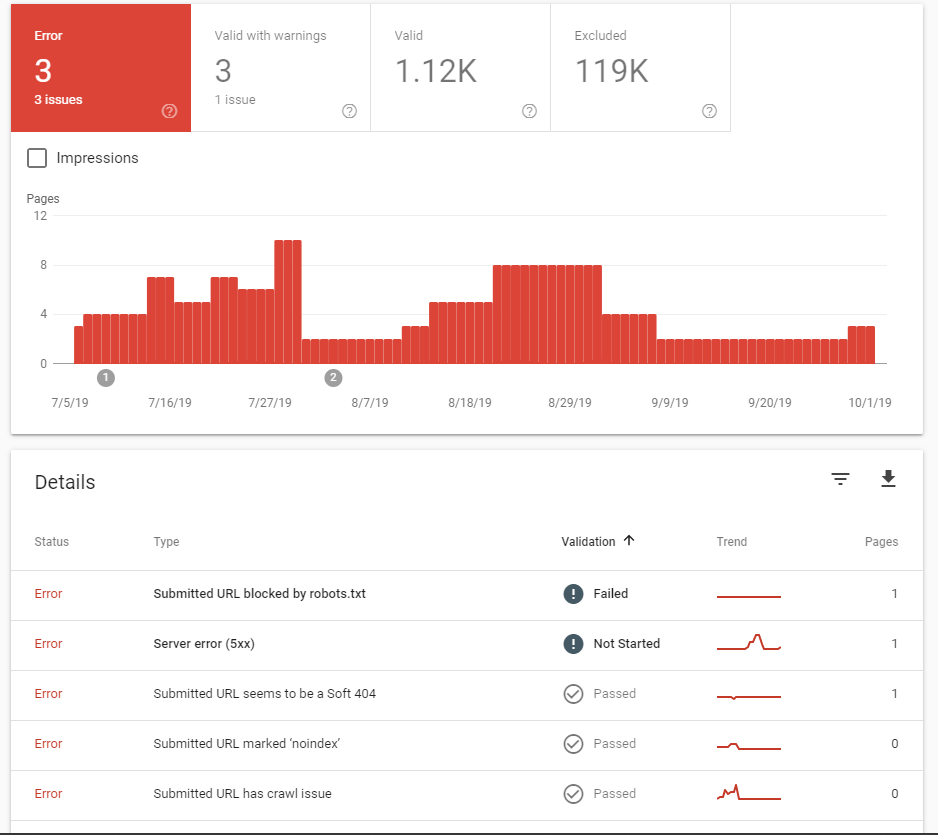

Fix Crawl Errors

Do not stress yourself out guessing at the errors that affect your site. To find out how well your site follows crawler guidelines, make full use of the tools at your disposal. You can see your site’s crawl performance in the Coverage report in Search Console, which is where crawl issues are listed.

Looking at how Google sees your site, and acting on what it tells you to fix, is a surefire way to optimize for crawlers, not just for Google but for any search engine.

Do a Log File Analysis

This is also a great way to optimize for crawlers, because downloading the log files from your server lets you analyze how GoogleBot actually crawls your site. A log file analysis can help you understand your site’s strengths in terms of content and crawl budget, and it shows whether crawlers and users are visiting the right pages. Specifically, these pages should be relevant both for the user and for the purpose of your site.

Most SEOs do not use log file analysis to improve their sites, but with the update that Google is planning to roll out, I think it is high time that this becomes a standard for everyone in the industry.

There are many tools at your disposal that can show you the hits on your website, whether they come from a bot or a user, which can help you in generating relevant content. With this information, you can see how search engine crawlers behave on your site and which pages they request.
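If you would rather start without a dedicated tool, a basic log file analysis can be sketched in a few lines of Python. This assumes your server writes the common “combined” access log format. The sample lines, IP addresses, and paths below are made up for illustration, and `googlebot_hits` is a hypothetical helper name.

```python
import re
from collections import Counter

# Pattern for the "combined" access log format (an assumption about your server setup).
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Made-up sample lines for illustration only.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Dec/2019:06:25:14 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Dec/2019:06:25:20 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Googlebot/2.1; '
    '+http://www.google.com/bot.html) Chrome/76.0.3809.100 Safari/537.36"',
    '203.0.113.7 - - [10/Dec/2019:06:26:01 +0000] "GET /blog/ HTTP/1.1" 200 5123 '
    '"https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Dec/2019:06:27:45 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]


def googlebot_hits(lines):
    """Count requests whose user agent mentions Googlebot, by path and by status code."""
    by_path = Counter()
    by_status = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "googlebot" not in match["agent"].lower():
            continue  # skip unparseable lines and non-Googlebot traffic
        by_path[match["path"]] += 1
        by_status[match["status"]] += 1
    return by_path, by_status


paths, statuses = googlebot_hits(SAMPLE_LOG)
print(paths.most_common())
print(statuses.most_common())
```

Paths that soak up a lot of crawler hits but do not matter to your site, or status codes other than 200, are the first things I would look into.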

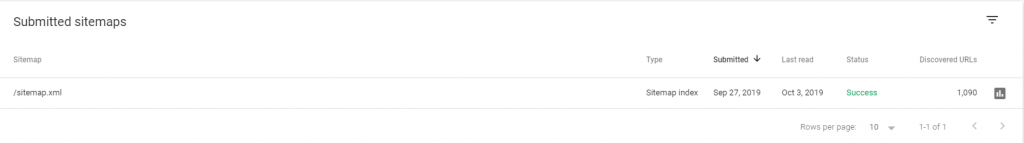

Optimize Sitemaps

It has been mentioned time and again that sitemaps do not put you at the front of the priority queue for crawling, but they do help you see what content is indexed for your site. A clean sitemap can do wonders for your site because it helps in improving user navigation and behavior as well.

The sitemap feature in Search Console can help you test whether your sitemap benefits your site or puts it in jeopardy. Start optimizing your sitemaps and it will improve your site.
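As a rough idea of what “clean” can mean in practice, the sketch below reads a sitemap in the standard sitemaps.org XML format and flags two things: duplicate URLs and URLs still listed over plain HTTP. These two checks are my own picks for illustration, the sample sitemap is made up, and `sitemap_urls` and `sitemap_problems` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Made-up sitemap for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/</loc></url>
  <url><loc>https://example.com/blog/</loc></url>
  <url><loc>http://example.com/old-page</loc></url>
</urlset>"""


def sitemap_urls(xml_text):
    """Return every <loc> value listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def sitemap_problems(urls):
    """Flag duplicate entries and plain-HTTP entries (illustrative checks only)."""
    counts = Counter(urls)
    return {
        "duplicates": sorted(url for url, n in counts.items() if n > 1),
        "insecure": sorted(url for url in counts if url.startswith("http://")),
    }


print(sitemap_problems(sitemap_urls(SAMPLE_SITEMAP)))
```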

Utilize Inspect URL Feature

If you are particularly meticulous about how your site content is doing, then inspecting specific URLs is a good way to find out where you can improve it.

The inspect URL feature gives you insight into a particular page on your site that may need improvements, which can help you keep up your efforts for Googlebot optimization.

Sometimes it is as simple as finding no errors for that URL, but at other times there are particular issues that you have to deal with head-on before the error goes away.

Key Takeaway

With the Googlebot update comes another way to help us SEOs bring better user experience to site visitors. What you should also take note of are the common issues that Google has seen while evaluating the change in the user agent:

- Pages that present an error message instead of normal page contents. For example, a page may assume Googlebot is a user with an ad-blocker, and accidentally prevent it from accessing page contents.

- Pages that redirect to a roboted or noindex document.

To see whether your site is affected by the change, you can load your webpage in your browser using the updated Googlebot user agent. Click here to learn how to override the user agent in Chrome, and comment down below to tell us whether your site is affected.
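If you prefer a script to the browser, the same check can be sketched in Python: request a page once with a regular browser user agent and once with a Googlebot-style one, then compare the results. Both user agent strings are illustrative samples, the `noindex` check is a crude text search, and `fetch` and `differences` are hypothetical helper names. Some sites also treat requests differently based on IP address, so this only approximates what Googlebot really sees.

```python
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import List

# Illustrative sample strings (assumptions for this sketch).
GOOGLEBOT_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "Googlebot/2.1; +http://www.google.com/bot.html) "
    "Chrome/76.0.3809.100 Safari/537.36"
)
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36"
)


@dataclass
class PageResult:
    status: int
    final_url: str
    body: str


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Build a GET request that sends the given user agent string."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch(url: str, user_agent: str, timeout: float = 10.0) -> PageResult:
    """Fetch a page and keep its status, final URL after redirects, and body."""
    try:
        with urllib.request.urlopen(build_request(url, user_agent), timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return PageResult(resp.status, resp.geturl(), body)
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise; keep them as results so they can be compared.
        return PageResult(err.code, url, err.read().decode("utf-8", errors="replace"))


def differences(browser: PageResult, bot: PageResult) -> List[str]:
    """List the ways the Googlebot-style response differs from the browser one."""
    issues = []
    if browser.status != bot.status:
        issues.append(f"status differs: {browser.status} vs {bot.status}")
    if browser.final_url != bot.final_url:
        issues.append(f"redirect differs: {browser.final_url} vs {bot.final_url}")
    if "noindex" in bot.body.lower() and "noindex" not in browser.body.lower():
        issues.append("only the Googlebot response mentions noindex")
    return issues


# Usage with a hypothetical URL (left commented out so nothing hits the network by default):
# url = "https://example.com/"
# print(differences(fetch(url, BROWSER_UA), fetch(url, GOOGLEBOT_UA)))
```

An empty list means the two requests were treated the same way. Anything else is worth checking against the two issues Google listed above.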