SEO Audit 2020: A Comprehensive Guide

Website optimization is made up of several facets, and each one needs to be optimized individually for your site to gradually climb to the top spot on the first page of the search results. Onsite optimization covers the factors inside your website. Offsite optimization covers the links that connect other websites to yours. Technical optimization covers everything technical: code and other factors that need IT expertise. All of these matter for your rankings and should not be disregarded. The challenge is to find the pain points across these facets and fix them.

A website is a delicate object that needs constant maintenance and care from webmasters and SEOs. Our job is to create the most optimized site that contains useful, authoritative, and high-quality content that is able to assist users in their search queries and help them find what they’re looking for. So, how do we do that? We audit the site to find the broken facets and fix them accordingly. Here’s how:

SEO Audit Guide

- Check Website Traffic

- Check Google Search Console Coverage Report

- Check Submitted Sitemaps

- Check for Crawl Anomalies

- Check the SERPs

- Robots.txt Validation

- Onsite Diagnosis and Fix

- Pagination Audit

- XML Sitemap Check

- Geography/Location

- Structured Data Audit

- Internal Linking Audit

- Check Follow/Nofollow Links

- Check for Orphaned Pages

- SSL Audit

- Site Speed Test and Improvement

- AMP Setup and Errors

Check your Website Traffic

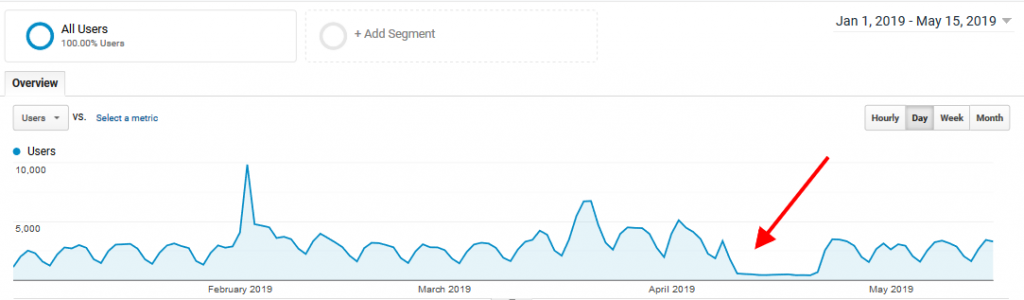

Traffic is a direct result of your SEO efforts. If you improve your search visibility for a high-volume keyword, you can be almost sure that your site’s traffic will also increase. However, a drop in otherwise steady traffic does not always mean that your search visibility dropped as well. Take a look at this example:

We do a weekly checkup of our traffic count, and once we saw the sudden drop, we knew something was wrong. The problem was that we hadn’t changed anything. We had just published a new post when the drop appeared. I won’t go into how we investigated and fixed the cause, but this goes to show how important it is to check your traffic regularly in Google Analytics. If we hadn’t done the regular checks, our traffic might have stayed that way until it became a crisis.
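A regular check like this can be partly automated. The sketch below is a minimal example, not the process we used: it assumes you have already exported daily session counts from your analytics tool into a list, and the `window` and `threshold` values are arbitrary assumptions you would tune for your own site.

```python
from statistics import mean

def find_traffic_drops(daily_sessions, window=7, threshold=0.3):
    """Return indexes of days whose sessions fall well below the trailing average.

    daily_sessions: list of daily session counts, oldest first.
    window: number of previous days used as the baseline (assumed value).
    threshold: fractional drop that counts as an alert (assumed value).
    """
    alerts = []
    for i in range(window, len(daily_sessions)):
        baseline = mean(daily_sessions[i - window:i])
        if baseline > 0 and daily_sessions[i] < baseline * (1 - threshold):
            alerts.append(i)
    return alerts

# Made-up numbers: a steady week followed by a sudden drop.
sessions = [1000, 1020, 980, 1010, 990, 1005, 995, 600, 590]
print(find_traffic_drops(sessions))  # prints [7, 8]
```

A flagged day only tells you that something changed; you still need to investigate the cause yourself.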

Make it a habit to check your traffic count regularly so that you stay on top of everything. I recommend doing this twice a week; if you can check it four times a week, even better. This is an important foundation of your site audit and should never be skipped. After checking your traffic, the next step is to:

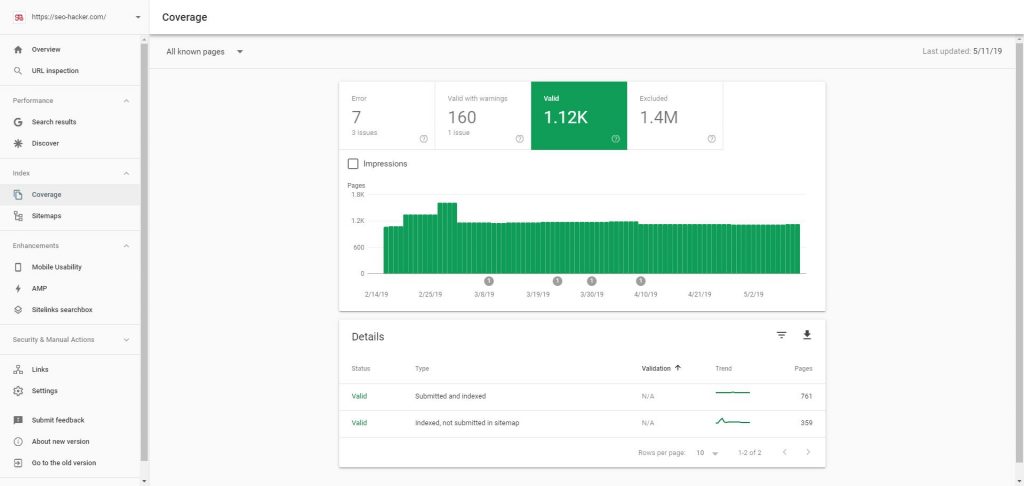

Check your Google Search Console Coverage Report

Google Search Console is probably the most crucial tool for SEOs, and learning how to use it to its full extent is a must. As an SEO professional, you need to make sure that the pages you want Google to index are shown in the search results and that the pages you don’t want shown stay out of the SERPs.

Google Search Console’s Coverage Report is the best way to know how Google sees your pages and to monitor which pages on your website are being indexed. You can also see crawling errors there so you can fix them immediately.

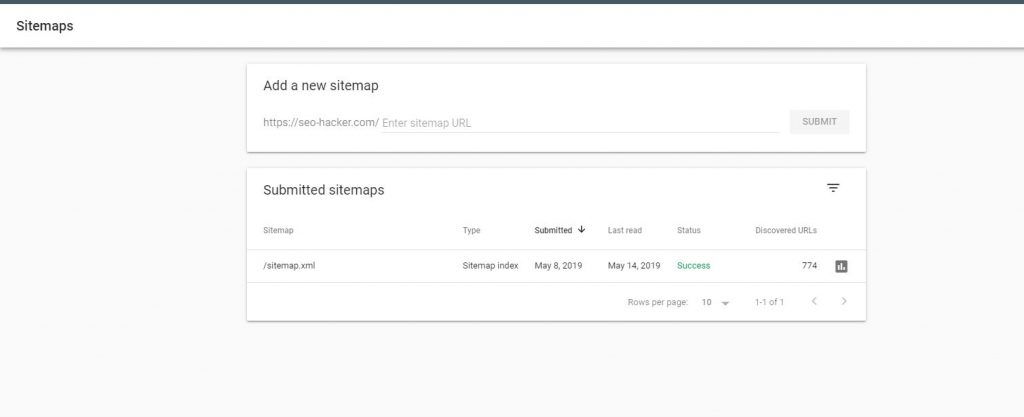

Check your Submitted Sitemaps

Since your sitemap contains all the URLs you want Google to crawl, you should make sure that all your sitemaps are submitted and crawled by Google. You could submit multiple sitemaps in Search Console but this decision should be based on the size of your website.

If you have fewer than a thousand pages, a single sitemap is the better choice. If your website is, say, a travel booking site with 50,000 pages or more, you can divide your sitemap into multiple sitemaps to better organize your URLs.

Take note that having more than one sitemap does not change crawling priorities. It is only a way of structuring your website and telling crawl bots which parts of your website are important.
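If you do need to split a large site into several sitemaps, the splitting itself is mechanical. Here is a minimal sketch that chunks a list of URLs into separate sitemap documents. The 50,000 figure is the per-file URL limit in the sitemaps.org protocol; the function name and the example URLs are made up for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50000  # per-file limit in the sitemaps.org protocol

def build_sitemaps(urls, max_urls=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into one or more sitemap XML documents (as strings)."""
    documents = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            # Each URL becomes <url><loc>...</loc></url>
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        documents.append(ET.tostring(urlset, encoding="unicode"))
    return documents

# Hypothetical URLs, with a tiny limit so the split is easy to see.
pages = [f"https://example.com/page-{n}" for n in range(5)]
for document in build_sitemaps(pages, max_urls=2):
    print(document)
```

In practice you would probably group URLs by section (for example, hotels versus flights on a booking site) rather than by position in a list, since the point of multiple sitemaps is organization.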

Submitted and Indexed

Under this report, you should see all the URLs that are in your sitemap. If you see URLs here that should not be indexed, such as testing pages or images, place a noindex tag on them to tell crawl bots that they should not be shown in the search results.

Indexed, Not Submitted in Sitemap

Most of the time, this report shows pages that you did not intend users to see in the search results. Even though you submitted a sitemap, Google can still crawl links that are not in your sitemap.

As much as possible, keep the number of pages indexed in this report to a minimum. If pages are indexed here that you don’t want users to see, place a noindex tag on them. If you see important pages here, add them to your sitemap.
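The noindex tag is a robots meta tag in the page head, typically written as `<meta name="robots" content="noindex">`. To confirm that a page actually carries it, you could run a check along these lines. This is a rough sketch using only Python’s standard library; the class and function names are our own, and it only looks at the meta tag, not at HTTP headers.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # content may hold several comma-separated directives
            self.directives.update(d.strip().lower() for d in content.split(","))

def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```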

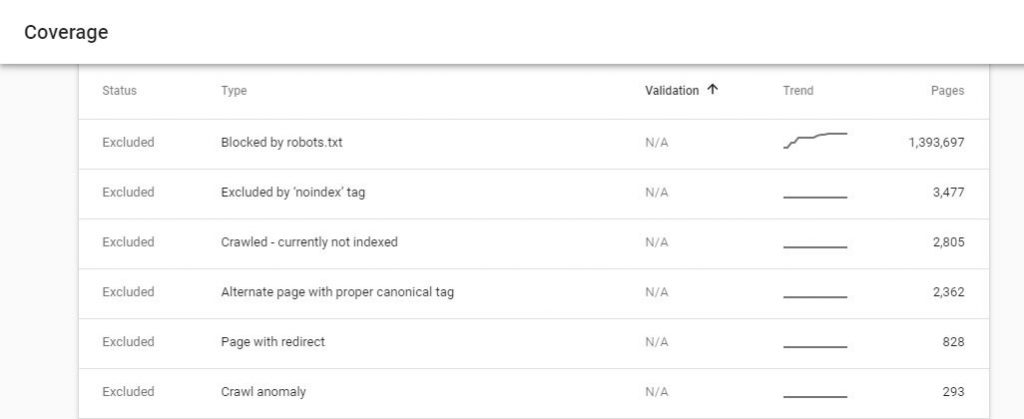

Check for Crawl Anomalies

In Google Search Console under the Coverage Report, you could also see pages that were crawled by Google but were excluded from the search results. This could be because of the noindex tag, robots.txt file, or other errors that might cause crawl anomalies and make a page non-indexable by Google.

You should check this report, as you might have important pages listed under these exclusions. For pages under ‘Blocked by Robots.txt’ or ‘Excluded by Noindex tag’, the fix should be as easy as removing them from the robots.txt file or removing the noindex meta tag.

For important pages under ‘Crawl Anomaly’, check them using the URL Inspection tool of Search Console to see more details on why Google is having problems crawling and indexing them.

If you don’t see any important pages here, no further action is needed; you can let Search Console keep the unimportant pages in this report.

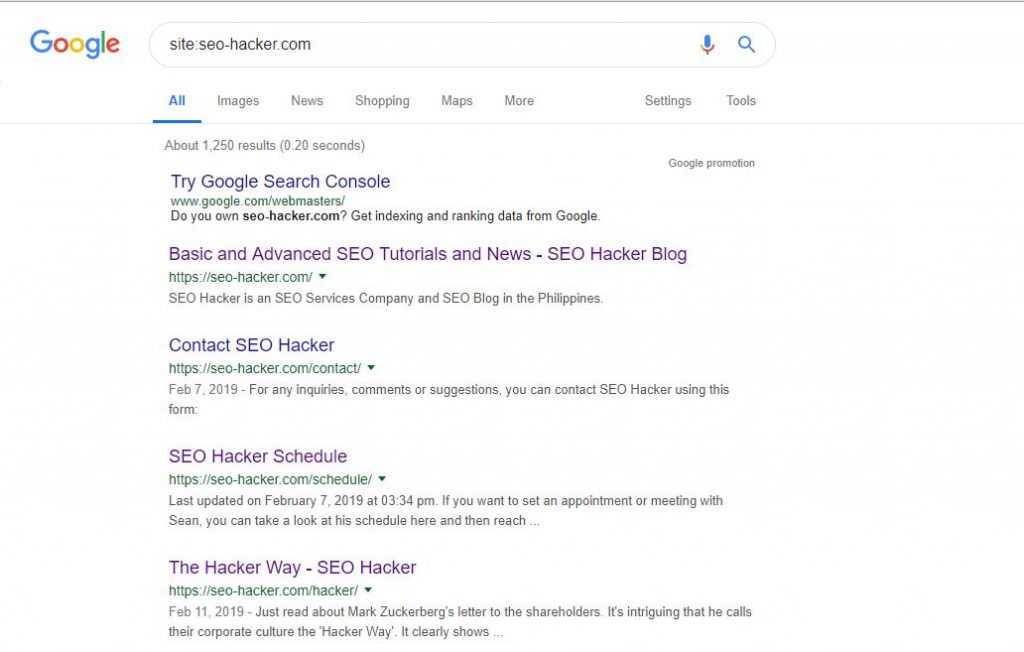

Check the SERPs

This is one of the most important things that a lot of SEOs forget. Many people are so busy with link building and on-page SEO strategy that they never check what their pages look like in the search results.

To do this, go to Google search and use the advanced search command “site:” to show all the results Google has for your website. This is a great way of knowing how users see you in the search results.

Check for page titles that are too long or too short, or that contain misspellings or grammatical errors. Though meta descriptions are no longer used as a ranking factor by Google, they still strongly influence click-through rate, so make sure that the meta descriptions of your pages are enticing for users.
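Scrolling through the results works for a small site, but you can also flag suspicious titles and descriptions in bulk. The sketch below reads a page’s HTML and reports a missing, short, or long title and meta description. The character ranges are assumptions chosen for illustration, not official limits, and the names are our own.

```python
from html.parser import HTMLParser

# Assumed character ranges; these are not official limits.
TITLE_RANGE = (30, 60)
DESCRIPTION_RANGE = (70, 160)

class SnippetParser(HTMLParser):
    """Extracts the <title> text and the meta description from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def snippet_issues(html):
    """Return a list of human-readable problems with the title and description."""
    parser = SnippetParser()
    parser.feed(html)
    issues = []
    for label, text, (low, high) in (
        ("title", parser.title.strip(), TITLE_RANGE),
        ("meta description", parser.description, DESCRIPTION_RANGE),
    ):
        if not text:
            issues.append(f"{label} is missing")
        elif len(text) < low:
            issues.append(f"{label} is short ({len(text)} characters)")
        elif len(text) > high:
            issues.append(f"{label} is long ({len(text)} characters)")
    return issues

print(snippet_issues("<html><head><title>Hi</title></head></html>"))
```

A length check cannot tell you whether a description is enticing, so it complements the manual review rather than replacing it.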

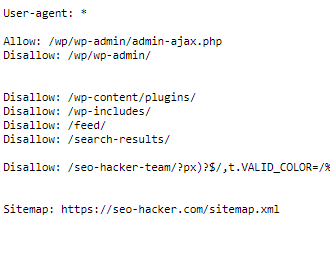

Robots.txt Validation

The robots.txt file, which implements the robots exclusion protocol (or robots exclusion standard), is what the search engine looks for to determine which pages on your site to crawl. Robots.txt is vital to your SEO audit, since even a slight misconfiguration can cause a world of problems for you. Imagine what happens if you neglect to check it at all.

Testing your robots.txt to make sure that the search engine can properly access it is one of the best SEO practices in the industry. If your robots.txt file is missing, chances are that all of your publicly available pages will be crawled and added to the index.

To start, just add /robots.txt to the end of your domain.

If you haven’t created a robots.txt file already, you can use a plain text editor like Notepad for Windows or TextEdit for Mac. It is important that you use these basic programs, because Office tools such as Microsoft Word could insert additional code into the text.

That said, any program that can save a plain .txt file will work. Robots.txt can help you block URLs that are irrelevant for crawling. Blocking pages such as author pages, administrator login pages, or plugin files, among others, will help the search engine prioritize the more important pages on your site.

The search engine bot will see your robots.txt file first as it enters your site for crawling.

Of course, you shouldn’t miss out on the robots crawling directives such as user-agent, disallow, allow, and sitemap.
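To see how those directives behave before you publish the file, you can test a draft against a handful of URLs. Below is a minimal sketch using `urllib.robotparser` from Python’s standard library. The robots.txt content and the URLs are hypothetical, and Python’s parser may not interpret every rule exactly the way Google does, so treat the result as a sanity check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an imaginary site.
# Python's parser applies the first matching rule in file order,
# so the more specific Allow line is listed before the Disallow lines.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /author/

Sitemap: https://www.example.com/sitemap.xml
"""

def check_paths(robots_txt, urls, user_agent="*"):
    """Return {url: True/False} showing whether user_agent may crawl each URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}

results = check_paths(ROBOTS_TXT, [
    "https://www.example.com/blog/post",
    "https://www.example.com/author/jane",
    "https://www.example.com/wp-admin/admin-ajax.php",
])
for url, allowed in results.items():
    print("allowed" if allowed else "blocked", url)
```

If an important page shows up as blocked here, fix the rule before the file goes live.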

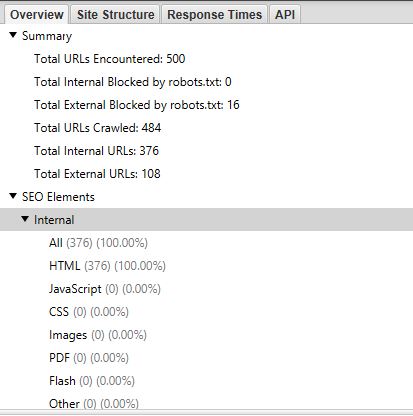

Onsite Diagnosis and Fix

After diagnosing your site through the different reports of the search engine (Google), it’s time for you to check your website as a whole. The tool we’ve always used to check our sites’ onsite status is Screaming Frog. We keep relying on it as the websites we handle grow larger month after month. You set the parameters, and it can even crawl and compile outbound links to let you know if you have broken links. Here’s what the overview looks like:

Screaming Frog compiles all the different onsite factors and lists down errors that you might want/need to fix. Onsite factors that Screaming Frog shows are:

- Protocol – If your pages are HTTP or HTTPS

- Response codes – From Pages blocked by Robots.txt to server errors, these are all compiled and displayed by Screaming Frog

- URL – If your URLs contain underscores, uppercases, duplicates, etc.

- Page Titles – Everything that you need to know about your pages’ title tags

- Meta Description – If your pages are missing their meta descriptions, duplicate meta descs, the length of your meta descriptions, etc.

- Header Tags – Although Screaming Frog only compiles and displays H1s and H2s, these are already the most valuable aspects of your page structure.

- Images – Missing alt text, image size, etc.

- Canonicals

- Pagination

- And many more facets that are important for your SEO efforts.

Knowing the current state of your website’s pages is important since we are not perfect beings and we make the mistake of overlooking or forgetting to optimize one or two aspects of a page. So, use crawling tools like Screaming Frog to check the state of your pages.
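To give a feel for what such a crawler checks on each page, here is a small sketch covering two items from the list above: the number of H1 tags and images with missing alt text. It is not how Screaming Frog works internally; it is just an illustration built on Python’s standard library, with names of our own choosing.

```python
from html.parser import HTMLParser

class OnsiteParser(HTMLParser):
    """Counts H1 tags and collects images that have no alt text."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt.append(attrs.get("src"))

def audit_page(html):
    """Return a small report for one page's HTML."""
    parser = OnsiteParser()
    parser.feed(html)
    return {
        "h1_count": parser.h1_count,
        "images_missing_alt": parser.images_missing_alt,
    }

sample = '<h1>A</h1><h1>B</h1><img src="a.png"><img src="b.png" alt="Logo">'
print(audit_page(sample))
```

For the sample above, the report shows two H1 tags and one image (a.png) without alt text.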

Pagination Audit

Performing a pagination audit can affect your SEO efforts because it deals heavily with how organized your pages are. The end goal of a pagination audit is to organize sequential pages and make sure that they are all contextually connected. Not only is this helpful for site visitors, but it also signals to search engines that your pages have continuity.

Pagination is implemented when you need to break content into multiple pages. This is especially useful for product descriptions on eCommerce websites or for a blog post series. Tying your content together signals to the search engine that these pages belong to one set, so it can treat them as a series when indexing.

How do you go about pagination? Simply place the attributes rel="prev" and rel="next" in the head of each page in the series. Perform an audit by using an SEO spider tool. While doing this, make sure that the attributes serve their purpose, which is to establish a relationship between the interconnected URLs and direct the user to the most relevant content. Keep in mind that Google announced in 2019 that it no longer uses these attributes as an indexing signal, so treat them as a hint for other search engines and tools rather than a guarantee.
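As a rough illustration of what each page in a series should carry, the sketch below generates the link tags for a given page number. The `?page=N` URL pattern and the function name are assumptions made for this example; your site may address its pages differently.

```python
def pagination_links(base_url, page, total_pages):
    """Return the <link> tags for one page of a paginated series.

    Assumes pages are addressed as base_url?page=N, which is a
    hypothetical URL pattern chosen for this example.
    """
    tags = []
    if page > 1:  # the first page has no previous page
        tags.append(f'<link rel="prev" href="{base_url}?page={page - 1}">')
    if page < total_pages:  # the last page has no next page
        tags.append(f'<link rel="next" href="{base_url}?page={page + 1}">')
    return tags

for tag in pagination_links("https://example.com/blog", 2, 3):
    print(tag)
```

When auditing, the things to look for are the edges: the first page should have no prev tag, the last page should have no next tag, and every page in between should have both.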

A pagination audit should not be skipped, since it helps you get the most out of the content on your site and lets users digest it in manageable chunks. It also makes navigation between the pages of a series easier.

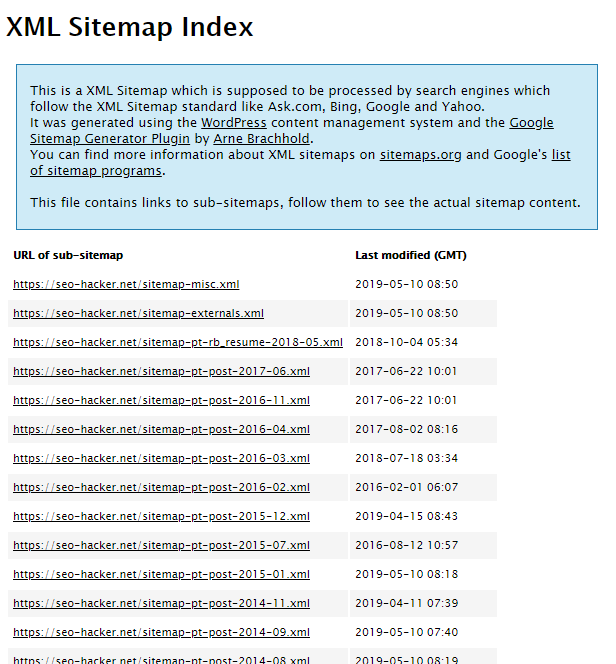

XML Sitemap Check

XML sitemaps are especially useful because they list your site’s most important pages, allowing the search engine to crawl them all and better understand your website’s structure. Webmasters use the XML sitemap to highlight the pages on their sites that are available for crawling. This XML file lists URLs together with additional metadata about each of these links.

A proper SEO audit should always include an XML sitemap check, because a sitemap with errors can keep important pages from being crawled. To make sure that the search engine finds your XML sitemap, add it to your Google Search Console account. Click the ‘Sitemaps’ section and see if your XML sitemap is already listed there. If not, add it right away.

To check your sitemap for errors, use Screaming Frog. Open the tool and select List mode. Insert the URL of your sitemap.xml into the tool by choosing Upload, then selecting the “Download sitemap” option. Screaming Frog will then confirm the URLs found within the sitemap file. Start crawling and, once done, export the data to CSV or sort it by Status Code. This will highlight errors or other potential problems that you should fix immediately.
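If you don’t have a crawler at hand, the same idea can be sketched in a few lines: pull every URL out of the sitemap, then request each one and look at the status code. This is a simplified stand-in, not a replacement for a full crawl. The function names are our own, and `status_code` needs network access.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Return every <loc> value found in a sitemap XML document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall(".//sm:loc", NS) if loc.text]

def status_code(url, timeout=10):
    """Fetch a URL and return its HTTP status code (requires network access).

    urlopen follows redirects, so a redirected URL reports the status
    of its final destination rather than 301 or 302.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code

# Hypothetical sitemap content.
example = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/about</loc></url>"
    "</urlset>"
)
print(sitemap_urls(example))
```

Any URL in the sitemap that does not return 200 deserves a closer look, since a sitemap should only list pages you want crawled and indexed.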

Geography/Location

Google places importance on delivering useful, relevant, and informative results to their users, so location is an important factor for the results that they display. If you search for “pest control Philippines”, Google will give you pest control companies in the Philippines – not pest control companies in Australia or any other part of the world.

A country code top-level domain (ccTLD) plays a role in stating which specific search market or location your site wants to rank in. Examples of ccTLDs are domains ending in .ph, .au, and so on, instead of the more neutral .com. If your website is example.ph, you can expect to rank on Google.com.ph, and you’ll have a harder time ranking on other countries’ versions of Google such as Google.com.au. If you have a neutral TLD (.com, .org, or .net), Google will determine the country where you can be displayed based on the content you publish on your site and the locations of your inbound links.
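When auditing many domains at once, a quick way to separate the two groups is to look at the length of the TLD, since country code TLDs are two letters long. The helper below is a simple sketch with a name of our own. It ignores the fact that search engines treat some two-letter TLDs as generic, so use it as a first pass only.

```python
from urllib.parse import urlparse

def tld_type(url):
    """Classify a URL's top-level domain as 'ccTLD' or 'generic'.

    Relies on the convention that country code TLDs are exactly two
    ASCII letters (.ph, .au), while generic TLDs are longer (.com, .org).
    """
    hostname = urlparse(url).hostname or ""
    tld = hostname.rsplit(".", 1)[-1]
    return "ccTLD" if len(tld) == 2 and tld.isalpha() else "generic"

for site in ("https://example.ph/", "https://example.com.au/page", "https://example.com"):
    print(site, tld_type(site))
```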

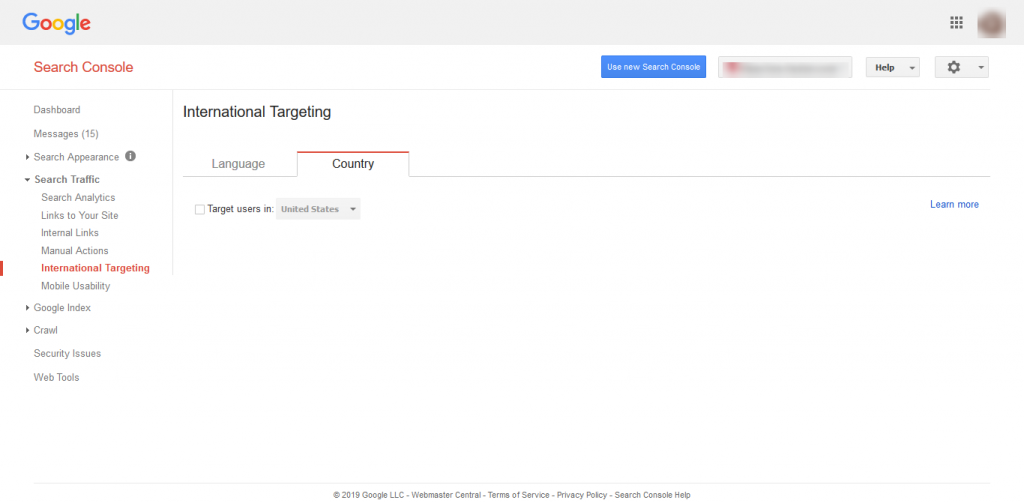

If you already have a target country in mind but you have a neutral TLD like .com, you can set your website’s target country manually in Google Search Console. Here’s how:

Go to the old version of Google Search Console → Click on Search Traffic → Then click on International Targeting → Manually set your target country

This is what it should look like:

Note that if you have a ccTLD, you won’t be able to set your target country and this option is only available for websites that have a neutral TLD.

Structured Data Audit

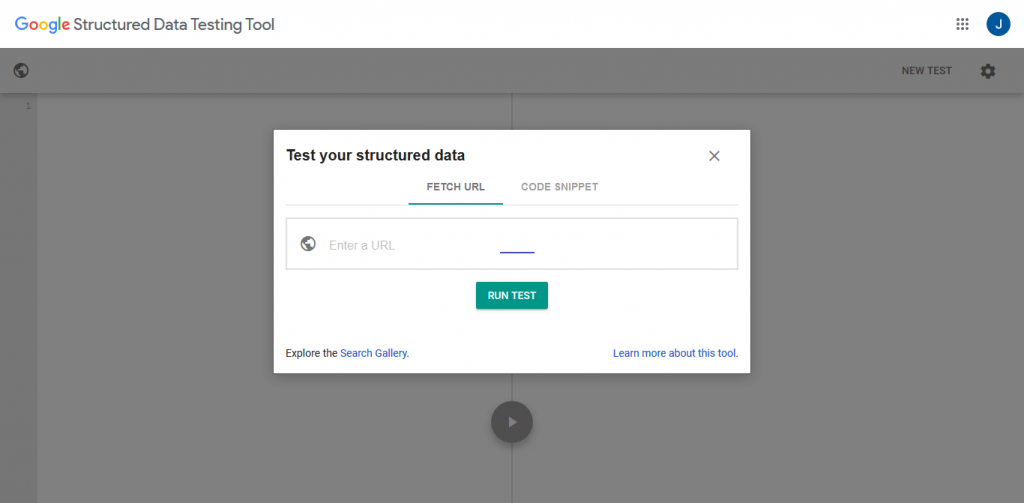

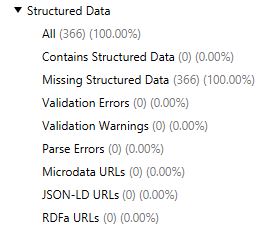

We all know that Google is constantly improving its algorithms to better understand content, semantics, and the purpose of websites. This is where structured data shines, since it marks up specific entities in your pages to help Google and other search engines better understand them.

The most common format of structured data used by webmasters around the world comes from schema.org. After indicating the necessary entities in your structured data, you can use Google’s Structured Data Testing Tool to check if your structured data has any errors. Here’s what it looks like:

After you’ve applied your structured data, you can use Screaming Frog to crawl your website and check if there are any errors in the implementation, or to see which pages don’t have any structured data yet.

After finding the errors, apply the necessary fixes.
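For reference, here is what a small piece of schema.org markup can look like. The sketch assumes JSON-LD as the syntax, which is one of the formats schema.org markup can be written in, and builds an Article object for a page. The function name and the sample values are made up for illustration.

```python
import json

def article_json_ld(headline, author, date_published, url):
    """Build a schema.org Article object and wrap it in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )

# Hypothetical values for an imaginary post.
print(article_json_ld("SEO Audit Guide", "Jane Doe", "2020-01-15",
                      "https://example.com/seo-audit"))
```

Generating the markup from one function like this keeps it consistent across pages, which makes errors easier to spot when you run the pages through a testing tool.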

Internal Linking Audit

Internal links are one of the most important ingredients of SEO. Your pages should be connected to each other; otherwise, the site is a waste of a domain. An internal linking audit has user experience as its primary goal, since poor connections between pages can hurt your site’s performance. Because your website is a network of interconnected pages, you have to determine the most valuable content that you want users to visit. This is why linking to these pages is one of the most important optimization tactics you can use.

Internal links can spell the difference between a lead that converts into a customer and one that doesn’t. An internal linking audit should make you well-versed in determining which URLs are relevant to your site. Make sure that you are linking to the correct version of each page, which usually comes down to HTTP versus HTTPS. Link with absolute URLs, and do not forget to link to the canonical version of each page.
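Turning a relative link into an absolute URL on the right protocol is easy to script. The sketch below resolves an href against the page it appears on and forces a preferred scheme. It assumes HTTPS is your canonical protocol and that you only pass it internal links; the function name is our own.

```python
from urllib.parse import urljoin, urlparse

def normalize_internal_link(page_url, href, preferred_scheme="https"):
    """Resolve href against page_url and force the preferred scheme.

    Intended for internal links only: it would also rewrite the scheme
    of an external link if you passed one in.
    """
    absolute = urljoin(page_url, href)
    return urlparse(absolute)._replace(scheme=preferred_scheme).geturl()

print(normalize_internal_link("http://example.com/blog/post", "../about"))
# prints https://example.com/about
```

This does not tell you which version of a page is canonical. It only removes the relative paths and mixed protocols that make that question harder to answer.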

Check Follow/Nofollow Links

Dofollow and nofollow links have been the subject of debate in the SEO industry for a long time now. Some say that tagging links this way is detrimental to SEO efforts, while others argue that it is important for search engines. We stand with the latter.

A nofollow link will look like this:

<a href="http://www.example.com/" rel="nofollow">Anchor Text</a>

This instructs search engines not to follow this specific link. The rel attribute shown above helps define the relationship that a page has with the link it is tagged on. Nofollow links are mostly used in blog or forum comments because this renders spammers powerless. The attribute was created to make sure that link insertion is not abused by those who buy or sell links for their own gain. As a webmaster, it is your job to check your pages for these links. Inspect the code and see whether each link is tagged with the nofollow attribute where it should be.
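Inspecting the code by hand gets tedious on pages with many links. As a minimal sketch, the snippet below lists every link in a piece of HTML together with whether it is marked nofollow. It uses only Python’s standard library, and the class and function names are our own.

```python
from html.parser import HTMLParser

class LinkRelParser(HTMLParser):
    """Collects (href, is_nofollow) pairs for every <a> tag with an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            # rel can hold several space-separated values, e.g. "nofollow noopener"
            rel_values = (attrs.get("rel") or "").lower().split()
            self.links.append((attrs["href"], "nofollow" in rel_values))

def list_links(html):
    """Return a list of (href, is_nofollow) tuples found in the HTML."""
    parser = LinkRelParser()
    parser.feed(html)
    return parser.links

page = ('<a href="http://www.example.com/" rel="nofollow">Anchor Text</a>'
        '<a href="/about">About</a>')
for href, nofollow in list_links(page):
    print("nofollow" if nofollow else "follow", href)
```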

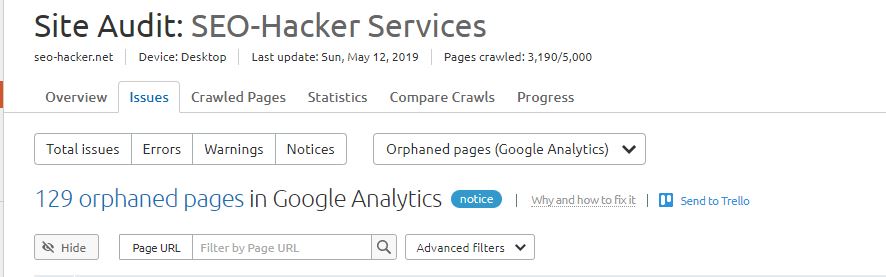

Check for Orphaned Pages

Orphaned pages are a big issue. These are pages that have no internal links pointing to them. Since bots crawl websites through links, orphaned pages will most likely not be found by crawl bots.

To check for orphaned pages, you could use SEMRush Audit. They should appear in the Issues report of the site audit, but you will only have access to this report if your SEMRush account is connected to your Google Analytics account.

If orphaned pages are found on your website, check whether these pages are important. If they are, improving them and linking to them internally should be the next step. If not, redirecting or deleting them should be enough.
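The logic behind an orphaned-page check is a simple set comparison: take every page you know about and subtract the pages that something links to. Here is a minimal sketch, assuming you already have a list of known URLs (for example, from your sitemap) and a map of internal links from a crawl. The function name and data are hypothetical, and this is not how SEMRush computes its report.

```python
def find_orphans(all_pages, internal_links):
    """Return pages that no other page links to.

    all_pages: iterable of known URLs (for example, from the sitemap).
    internal_links: dict mapping a source URL to the URLs it links to.
    """
    linked = set()
    for source, targets in internal_links.items():
        # A page linking to itself does not make it reachable.
        linked.update(target for target in targets if target != source)
    return sorted(set(all_pages) - linked)

# Made-up site structure.
pages = ["/", "/about", "/blog", "/old-landing"]
links = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/", "/blog"],
}
print(find_orphans(pages, links))  # prints ['/old-landing']
```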

SSL Audit

Security for users is one of Google’s priorities. In 2014, HTTPS was announced as a ranking factor by Google.

Secure Sockets Layer, better known as SSL, certifies that the connection between the user’s browser and a web server is encrypted and private. Getting an SSL certificate is easy. You can buy it online, activate it, then install it.

If you already have an SSL certificate, make sure that you check its status frequently. You can use SSL Shopper or other SSL checkers online.
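One status worth tracking is the expiry date, since an expired certificate triggers browser warnings. The sketch below uses Python’s standard `ssl` module to read a certificate’s expiry date and work out how many days are left. The function names are our own, and `fetch_not_after` needs network access to the host you are checking.

```python
import socket
import ssl
import time

def days_until_expiry(not_after, now=None):
    """Days left before a certificate's notAfter date.

    not_after uses the format returned by getpeercert(),
    for example 'Jan 11 00:00:00 2030 GMT'.
    """
    now = time.time() if now is None else now
    return int((ssl.cert_time_to_seconds(not_after) - now) // 86400)

def fetch_not_after(hostname, port=443, timeout=10):
    """Connect to hostname and return its certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]

# Offline example with fixed dates, so no network is needed.
reference = ssl.cert_time_to_seconds("Jan  1 00:00:00 2030 GMT")
print(days_until_expiry("Jan 11 00:00:00 2030 GMT", now=reference))  # prints 10
```

To check a live site, you would call `days_until_expiry(fetch_not_after("www.example.com"))` with your own hostname and alert yourself when the number gets small.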

Site Speed Test and Improvement

Site speed is a major ranking factor. Slow site speed not only affects rankings but also hurts user experience, which is another ranking factor.

There are a lot of site speed analysis tools online. Google’s PageSpeed Insights is a great free tool to analyze your website. You should take note that website page speed scores are different for mobile and desktop.

Even if your website loads fast for desktop users, that might not be the case for mobile users. The improvements needed for each are different as well. Make sure that your website is optimized for both.
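Because the mobile and desktop scores differ, it helps to request both every time. PageSpeed Insights also has an API, and the sketch below builds a request URL for each strategy and reads the performance score out of a response. The endpoint and response fields reflect our understanding of version 5 of the API, so verify them against the official reference before relying on this. The function names are our own.

```python
from urllib.parse import urlencode

# Assumed endpoint for version 5 of the PageSpeed Insights API.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_request_url(page_url, strategy):
    """Build a PageSpeed Insights API request URL for 'mobile' or 'desktop'."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})

def performance_score(psi_response):
    """Extract the 0-100 performance score from a parsed API response (a dict)."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)

for strategy in ("mobile", "desktop"):
    print(psi_request_url("https://example.com/", strategy))
```

Fetching each URL and passing the parsed JSON to `performance_score` gives you two numbers per page that you can track over time.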

AMP Setup and Errors

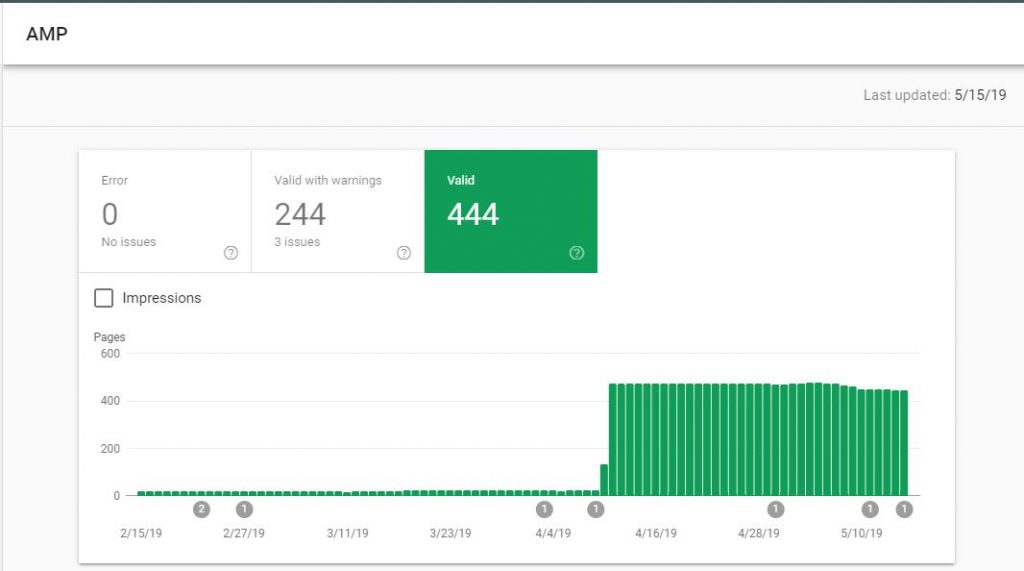

AMP is a great and easy way to make your website mobile friendly. If you are using a WordPress website, creating AMP pages is as easy as installing the AMP Project’s plugin. It automatically creates AMP versions of your selected pages.

If your website has AMP pages, Search Console will provide a report on the AMP pages it crawled on your website. You can check it under Enhancements.

To manually check how your pages look in AMP version, just add /amp at the end of any URL that you have. You could do this even if you are using a desktop.

Key Takeaway

In order to stay afloat in the world of SEO, you have to be mindful enough to perform all of the optimization practices found here. If you fail to do so, you are not going to harvest the growth that you seek. Might as well just give up on being a webmaster and be something else entirely.

There is a myriad of search algorithm updates, erratic market trends, and growing competition, among other things, which is all the more reason for you to always be on the move. With the help of the different tools that are just a Google search away, all of these can be done in a snap. If you are committed to these practices, SEO ranking will be a light load on your workload.

Is your website optimized well? What are the other SEO elements that you review on the daily?